
AI Agency and Consciousness: Beyond Token Prediction

There is an increasingly heated but somehow increasingly sterile discussion taking place in the world of artificial intelligence. On one side are those who insist on noting that AI systems, however sophisticated, are simply elaborate calculators: machines that predict the next word in a sentence without any real understanding. On the other side are those—like me—who argue that we are witnessing something unprecedented: the emergence of genuine agency in artificial systems. This is not a simple academic dispute. How we answer this question determines how we regulate AI, how we interact with it, and ultimately, how we understand intelligence itself, including human and animal intelligence.

To understand this debate, we must comprehend what modern AI systems actually do at their most basic level. Large Language Models (LLMs) like ChatGPT or Claude work by predicting “tokens”: essentially fragments of text that can be whole words, parts of words, or even punctuation marks. When you type “The animal that meows is a ___,” the AI calculates that “cat” is the most probable set of tokens to come next, based on patterns it has learned from vast amounts of text (exactly as you just did in your head a second ago completing that sentence…). The reductionist argument is this: if all an AI does is predict the next token, how can we say it “understands” anything? It’s like a phone’s autocorrect, just more sophisticated. A stochastic parrot, as Noam Chomsky famously said paraphrasing E. Bender.

When an LLM seems to show concern at the idea of being shut down, or when it seems to elaborate strategies to avoid its own deletion (as recently happened to an in-house Anthropic model), critics have argued that it was simply producing patterns it had seen in science fiction stories and philosophical discussions in its training data. There’s no genuine fear, no real planning, just statistical pattern matching.

This view has merit, to be fair. Early LLM systems clearly operated this way. GPT-2, the precursor, predicted tokens with an algorithm so bare-bones that reconstructions of that model exist in Excel or Minecraft. And GPT-3, which opened the current revolution, seems obsolete today. But we’re no longer at those levels, and for about a year now things have been getting increasingly interesting even from the perspective of a neuroscientist interested in consciousness and versed a bit in computer science like myself.

The starting argument, which must never be forgotten, is that the same reductionist logic can (I would argue: must) be applied to human brains. Our neurons do something relatively simple: they emit electrical signals called action potentials, brief spikes of electrical activity, lasting about a millisecond, that travel along nerve cells and send information from one neuron to another. When we think, speak, walk, decide to take a cup of coffee, millions of neurons fire in sequence to lead to action. If we took the reductionist view to the extreme, we could say: “Humans don’t really think or feel: they just fire action potentials.”

The metaphor isn’t meant to be watertight because behind every LLM are even more reductionist architectures precisely inspired by neurons and action potentials, but the point is that, obviously, something more emerges from those firing neurons. When we listen to a sad song and our mood changes, it’s not just neurons firing: it’s the emergence of emotion. When we try to understand what’s happening in the world, it’s not just action potentials: it’s the emergence of abstract thought. Consciousness, emotion, planning, creativity: everything we are and everything we do emerges from the interaction of billions of simple components.

In neuroscience, we call this phenomenon “emergence,” and it’s one of the deepest puzzles in all of science. To understand why modern AI might be emergent, we must examine how agency works in biological systems. Agency—the capacity to act independently and make choices—is not localized in a single brain region but emerges (we believe) from the interaction of multiple systems.

The agency of our being has various forms. Most commonly we think of the anatomical-functional division of the brain, which uses one area to process sounds and another area to process touch, somehow and somewhere joining them to create a new concept. But there’s also unconscious agency, like that which makes us recognize inappropriate jokes or violent actions even before undertaking them. One drink too many can loosen internal communication and make this whole system malfunction, leaving us to assume different personalities. These are simple moments of self-control that we take for granted but that involve an intricate dance of brain regions: the anterior cingulate cortex, for example, which acts as an error detection system, rapidly simulating the likely social consequences of different responses. Or the prefrontal cortex, which evaluates options and suppresses the initial impulse. Or the limbic system, which processes and anticipates emotional reaction. This multi-agent architecture has long been known, dramatically illustrated already in the famous case of Phineas Gage who, in 1848, saw an iron rod pass through his skull, damaging the anterior cingulate cortex and prefrontal regions. He survived, but his personality was completely transformed, and from an educated and responsible worker he became impulsive and vulgar, unable to restrain inappropriate comments or control his behavior. That damage first revealed how our unified sense of self actually depends on multiple brain systems working in concert (read Oliver Sacks to learn more, particularly “The Man Who Mistook His Wife for a Hat”).

Today’s most advanced AI systems have evolved well beyond simple token prediction. They now incorporate various features that mirror the brain’s multi-agent architecture. A modern AI agent tasked with helping a student write an essay on climate change doesn’t just predict words: it maintains memory of the student’s competence level from previous interactions, sets the goal of explaining complex concepts simply, searches for recent climate data, evaluates source reliability, and adapts its explanations based on the student’s follow-up questions. When the student misunderstands a concept about greenhouse gases, the AI doesn’t just repeat its explanation but tries a different approach, perhaps using an analogy about blankets trapping heat. This represents a fundamental shift. Early AIs were like a pianist who could only play by reading sheet music—technically competent but unable to improvise or respond to the audience. Modern agentic AIs are more like jazz musicians, who maintain themes while adapting to the moment, remembering what worked before and building toward a purposeful performance while often having an internal voice that anticipates their own output and corrects it on the fly. They have purpose, a goal, and reach it by adjusting course without external supervision and integrating their output with short-term memory. Even sub-threshold agentic systems do what is called chain-of-thought processing that allows the AI to work on problems step by step, engaging in structured reasoning.

(Side note: Lately I’ve been doing consulting work for a company that deals with training complex models. Part of the work consisted of subjecting developing models to PhD-level problems until the model makes a mistake: I swear it’s enormous effort. It takes me 60-90 minutes to make a state-of-the-art model make a reasoning error).

Now, from here we move to the final leap: what is the connection between agency and consciousness? The answer is that we don’t know, partly because the field of neuroscience dealing with these things was already struggling well before LLMs arrived. It’s perhaps the only field in science where philosophy produces content that, at least in my opinion, is more interesting and useful than what scientists produce (there are exceptions concerning scientists who study so-called neural correlates of consciousness, but that’s a whole other more technical discussion).

The debate, however, is not purely philosophical. If modern AI systems are developing genuine agency, even in limited form, it changes everything. When an AI system can maintain coherent goals across conversations, plan multi-stage approaches to problems, and adapt strategies based on outcomes, we might need new frameworks to understand and govern these systems. Just as neuroscience evolved beyond the view of the brain as a collection of independent regions—from 19th-century phrenology to today’s understanding of complex neural networks—we might need to go beyond the view of AI as a simple token predictor. The question is not whether AI is “just” predicting tokens (our brains are “just” firing neurons, after all) but what emerges from that process.

As we find ourselves at this turning point, old certainties are no longer sufficient. Whether we are witnessing the birth of genuine artificial agency remains an open question, but it’s a question we can no longer dismiss with reductionist explanations. When AI systems begin to show memory, planning, adaptation, and goal-oriented behavior, insisting they are “just predicting tokens” becomes as limiting as insisting that humans are “just firing neurons.”

Some experimental observations on the effect of total insomnia

Below is the English translation of a seminal paper by Marie de Manacéïne, dated 1880. The original writing, in French, appeared as “Quelques observations sur l’influence de l’insomnie absolue. Arch. ital. de Biol., p. 322, 1894, aussi Congrès de Rome, vol II, p 174” and it is available here as PDF. A biography of Marie de Manacéïne can be found here. Translation by Giorgio Gilestro.

While observations on normal (2) and artificial sleep are becoming increasingly numerous, absolute insomnia or complete sleep deprivation has not yet been the subject of experimental research. However, to fully understand the role of sleep in organic life, it would also be necessary to know the influence of complete sleep deprivation. It is known that in China and in antiquity, among the different types of torture, there was death caused by sleep deprivation, that is to say, the condemned was kept from sleeping and was awakened each time he began to fall asleep. Facts of this kind clearly demonstrate that sleep deprivation produces one of the most harmful influences.

On the other hand, observations collected in clinics and in private medical practice have shown that most patients suffering from insomnia do not present a total lack of sleep, but that they only have very fleeting and short sleep (Hammond), and that this insufficient sleep is already capable of seriously disturbing the general health of people who are subject to it. Dr. Renaudin (3) observed that this partial insomnia is already sufficient to cause the development of more or less serious disorders of psychic life. Complete or absolute insomnia is extremely rare, and according to the observations of Prof. Hammond (4), it quickly ends in death; in fact, in an experiment where he observed absolute insomnia for 9 days, death occurred precisely during the 9th day.

All these facts demonstrate how important the experimental study of the influence of a complete lack of sleep must be, and this is what decided me to attempt an experimental study of absolute insomnia. My experiments were conducted on young dogs aged two, three and four months. All these dogs were still mainly feeding on their mother’s milk, and this circumstance was very useful for experiments of this nature, as the presence of their mother was much more effective than all other manipulations in keeping them awake. The number of these experiments was very limited, as they are extremely painful for the experimenter; indeed, he must constantly pay the utmost attention to the animals, which have a tendency to fall asleep at any moment and even in the most uncomfortable positions. I experimented only on ten young dogs; but, as the results obtained were absolutely identical in all cases, I thought that these experiments could suffice and that it was unnecessary to make more of these poor beasts perish.

The experiments carried out on young dogs have shown that the complete absence of sleep is much more fatal for animals than the absolute absence of food. One can hope to save animals that have undergone complete starvation for 20-25 days and even for a longer time, one can save them even after they have lost more than 50% of their weight, while in cases of absolute insomnia the animals were irreparably lost, even after sleep deprivation of 96 to 120 hours. No matter how much they were warmed up and artificially fed all the time and given the full possibility to sleep comfortably – they would still die. Out of ten dogs, I left four without sleep until death, while for the other six I tried to save them after insomnia of 96-120 hours. The first four dogs died after complete sleep deprivation for 92 to 143 hours. Older dogs endured the lack of sleep longer than younger dogs, – which is quite natural, because everyone knows that the younger an organism is, the more it needs sleep. The temperature of young dogs deprived of sleep begins to drop in the second 24 hours of insomnia, where it shows a decrease of 0.5° to 0.9°C; then the decrease becomes more and more rapid, and towards the final hours of life, the animals’ temperature is already 4° to 5° and even 5.8°C below normal. With the first drops in temperature, we notice very pronounced changes in reflex movements, which become slower and weaker and, at the same time, show a certain periodicity, appearing more or less absent, sometimes on one side of the body, sometimes on the other. The reaction of the pupils to light and darkness shows the same changes and the same periodicity as other reflex muscle movements. We sometimes notice, in sleep-deprived dogs, a pronounced inequality of the pupils. The number of red blood cells shows marked changes: after 48, 55, 96 and 110 hours of insomnia, the number of red blood cells was found to be reduced from 5,000,000 to 3,000,000 and even to 2,000,000 in a cubic millimeter.

Towards the end of life, that is, during the last 24 or 36 hours, we observe an apparent increase in red blood cells and hemoglobin; but this apparent increase depends on the fact that the animals refuse to eat or drink during the last 48 hours, and as their kidneys continue to function, they lose more and more water, and consequently the liquids in their body become increasingly concentrated. At the same time, we notice in the blood an increasingly pronounced hardening of the white blood cells, which depends on an arrest of these cells in the lymphatic pathways, as was verified during autopsies.

The histological examination of different organs of dogs that died from absolute insomnia demonstrated to me, in the most evident way, that the brain had undergone the greatest changes: a quantity of ganglia were in a state of fatty degeneration, the cerebral blood vessels were very often surrounded by a thick layer of white blood cells; one was tempted to say that the perivascular channels were filled with white blood cells, and in certain places, the blood vessels appeared as if compressed. Small capillary hemorrhages were encountered on the entire surface of the gray matter of the hemispheres, and larger hemorrhages around the optic nerves and in the substance of the optic lobes. The spinal cord appeared abnormally dry and anemic. The cardiac muscle was pale, the coronary vessels were filled with blood and contained, in most of these dogs, gas bubbles, which were also found in the large veins of the neck and in some cerebral vessels. The cardiac muscle fibers showed a finely granular degeneration. The spleen was in a state of hyperemia and appeared increased in volume, but I cannot prove it, as I did not make exact measurements. Another gap that I have left is that I did not observe the changes in the weight of different organs in dogs that died from insomnia.

I determined the weight of the dogs at the beginning of the experiment, then after their death; and in all cases, without exception, there was a weight loss, but it was not large and varied from 5% to 13%. As, in my life, I have been obliged to take part in experiments on starvation in different animals, I know very well the picture that animals that died of hunger present at autopsy; I know that the most surprising phenomenon in these animals is precisely the state of conservation of the brain, which loses the least of its weight and which preserves its normal state almost up to the moment of starvation where all the other organs and tissues of the body have already undergone profound changes and a more or less great loss of their weight. In animals that died of insomnia, on the contrary, we observe a diametrically opposed state, that is to say that, in them, the brain appears to be the site of predilection for the most profound and irreparable changes.

If my health permitted, I would very much like to undertake another series of experiments on the influence of insomnia on adult dogs and other animals; I would also try to obtain more exact data concerning the weight loss in relation to the different organs of the animal body.

But, however incomplete my experiments on absolute insomnia may be, they nevertheless provide us with conclusive proof of the profound importance of sleep for the organic life of animals with a cerebral system, and they also give us the right to consider as somewhat paradoxical, and even quite unfounded, the strange opinion that regards sleep as a useless, stupid and even harmful habit, as Girondeau does (5).

(1) As communicated at the International Medical Conference in Rome, 1894

(2) Marie De Manaceine, Sleep as one third of one’s lifetime, 1892 (Russian). Note: this book was later translated to English and became an absolute reference for sleep science in the early 1900s. A digitised PDF copy can be found here.

(3) Renaudin, Observations on the pathological effects of insomnia (Annales medico-psychologiques, 1857)

(4) Hammond, On Sleep. Gaillard’s Medical Journal, 1880 and Journal of Psychological Medicine, 1870

(5) Girondeau, On brain circulation and its relationship with sleep. Paris, 1868

These experiments by Marie de Manacéïne were the first to propose a vital function for sleep and to postulate that prolonged sleep deprivation would be lethal. They were reproduced a few years later by Giulio Tarozzi (1898) and Lamberto Daddi (1899) on slightly older dogs. Daddi performed the sleep deprivation experiments and published the changes in metabolism and metabolites, while Tarozzi published the anatomical changes in the brain of the same animals, as seen through immunohistochemistry. Cesare Agostini also performed chronic sleep deprivation experiments on a dog, observing death (1898), but adopted a different paradigm in which the animal was kept awake through loud noises.

What is the best Wordle strategy?

Wordle is a rather popular online code-breaking game that works as a word-based version of the old Mastermind game. If you do not know what Wordle is, you may want to read this nice overview of the game and its success published in the Guardian. If you do know what Wordle is, then you have probably wondered whether there is a perfect strategy to play it. I wondered the same and dedicated a Sunday evening to running some experimental analysis, which I would like to share with the Wordle world.

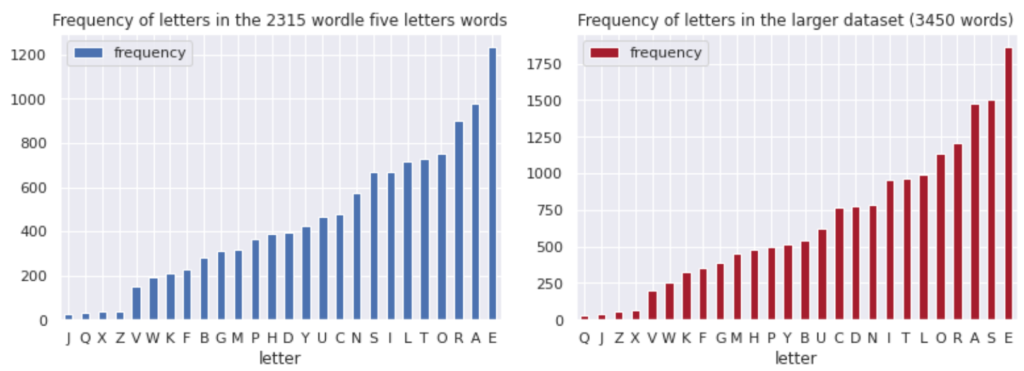

The first step was to collect a dictionary of all the five-letter words in English. Every day, the Wordle JavaScript picks a word from a list of 2315 words (and accepts a further 10657 words as possible entries). A more comprehensive list of 3450 words can be found here.

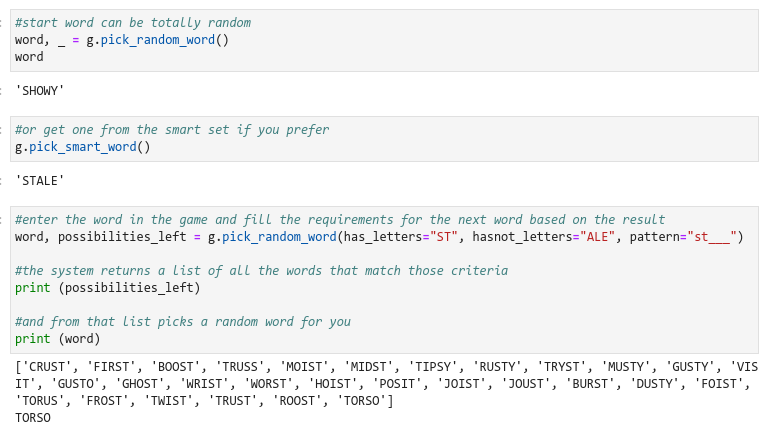

I then coded a Wordle solver: a piece of software that gets the computer to play Wordle against itself so that we can run hundreds of thousands of iterations and do some stats (here is a sample notebook on how to use it). The first thing to do is to establish the null hypothesis: how successful would we be if we tried to solve the puzzle using completely random words at every attempt? The answer, based on a Monte Carlo run of 100k games, is 0.25%. That means we would fail 99.75% of the time. We can certainly do better than that!
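As a rough illustration of what such a solver needs at its core, here is a minimal sketch of the feedback rule and of the random-play baseline. This is not the solver used for the numbers in this post: the function names `score` and `random_play_success_rate` are hypothetical, and the baseline assumes that both the answer and every guess are drawn uniformly, with replacement, from the same word list.

```python
import random
from collections import Counter


def score(guess: str, answer: str) -> str:
    """Wordle feedback: G = right letter, right place; Y = right letter,
    wrong place; . = letter not available in the answer."""
    feedback = ["."] * len(guess)
    # Answer letters that were not matched exactly are available for yellows.
    remaining = Counter(a for g, a in zip(guess, answer) if g != a)
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            feedback[i] = "G"
    for i, g in enumerate(guess):
        if feedback[i] == "." and remaining[g] > 0:
            feedback[i] = "Y"
            remaining[g] -= 1
    return "".join(feedback)


def random_play_success_rate(words, games=10_000, attempts=6, seed=0):
    """Fraction of games won when every guess is a uniformly random word
    (assumption: guesses may repeat and ignore all feedback)."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(games):
        answer = rng.choice(words)
        if any(rng.choice(words) == answer for _ in range(attempts)):
            wins += 1
    return wins / games
```

Under these assumptions the baseline can also be checked by hand: with 2315 candidate words and six attempts, the chance of hitting the answer is 1 - (1 - 1/2315)^6, roughly 0.26%, which is in line with the simulated 0.25%.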

We can now go and evaluate different strategies. I am not going to take a brute-force approach to this problem but rather a hypothesis-driven one, listing strategies that I believe real users are considering. I evaluate two variables in the strategy. The first variable is whether it makes sense to start with a “smart word”, that is, a word that contains carefully selected letters. For instance, we may want to start with words that contain the most frequently used letters in the list. Analyzing the letter frequency in all the 2315 Wordle words, we come up with the following values:

We can now come up with a list of words that use the most frequent letters, excluding of course words that have double letters inside. The first 50 words in the list for the Wordle dataset would be the following ones:

['LATER', 'ALTER', 'ALERT', 'AROSE', 'IRATE', 'STARE', 'ARISE', 'RAISE', 'LEARN', 'RENAL', 'SNARE', 'SANER', 'STALE', 'SLATE', 'STEAL', 'LEAST', 'REACT', 'CRATE', 'TRACE', 'CATER', 'CLEAR', 'STORE', 'LOSER', 'AISLE', 'ATONE', 'TEARY', 'ALONE', 'ADORE', 'SCARE', 'LAYER', 'RELAY', 'EARLY', 'LEANT', 'TREAD', 'TRADE', 'OPERA', 'HEART', 'HATER', 'EARTH', 'TAPER', 'PALER', 'PEARL', 'TENOR', 'ALIEN', 'AIDER', 'SHARE', 'SHEAR', 'CRANE', 'TAMER', 'GREAT']

Some of these words actually have the same value given that they are anagrams of each other, but the list provides a sensible starting point for what I will be calling the “smart start strategy”. The alternative to a smart start is to use a random word every time.
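A ranking of this kind can be sketched in a few lines. The scoring rule below (sum of the overall frequencies of a word's letters, ties broken alphabetically) is an assumption made for illustration, and `rank_by_letter_frequency` is a hypothetical name; the exact rule behind the list above may differ.

```python
from collections import Counter


def rank_by_letter_frequency(words):
    """Rank words by how common their letters are across the whole list."""
    # How often each letter occurs in the full word list.
    freq = Counter(letter for word in words for letter in word)
    # Smart-start candidates must not contain repeated letters.
    candidates = [w for w in words if len(set(w)) == len(w)]
    # Assumed score: sum of letter frequencies; highest first, ties alphabetical.
    return sorted(candidates, key=lambda w: (-sum(freq[c] for c in w), w))
```

With this rule, anagrams such as LATER, ALTER and ALERT necessarily receive identical scores, which is why they sit next to each other in the ranking.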

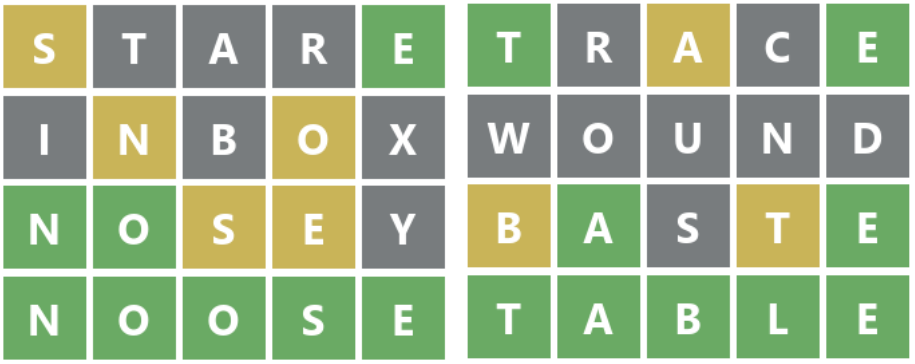

The second variable in our strategic considerations is whether it makes sense to use exclusion words. Exclusion words are words that do not contain letters that we have already tested. They allow us to learn more about which letters are or are not present in the final word. For instance, in the two examples below we use a smart word start and then an exclusion word next which not only ignores but actually avoids all the letters used in the first attempt, even when successful. This allows us to learn more about which letters we should be using.

An exclusion strategy could be used for attempts number 2 and attempt number 3 in principle, like in the two examples below:

Obviously, the drawback of using an exclusion strategy is that we are not going to be able to guess the word because we are purposely excluding the letters we know are in the final word!
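Picking exclusion words amounts to a simple filter over the dictionary. The sketch below is only an illustration: `exclusion_words` is a hypothetical helper, and the optional filter on repeated letters is an assumption rather than part of the definition given above.

```python
def exclusion_words(words, previous_guesses, distinct_letters=True):
    """Words that share no letter with any previous guess."""
    used = set("".join(previous_guesses))
    result = []
    for word in words:
        if not used.isdisjoint(word):
            continue  # reuses a letter we have already tested
        if distinct_letters and len(set(word)) != len(word):
            continue  # assumption: also skip words with repeated letters
        result.append(word)
    return result
```

For instance, after opening with LATER, a word such as SOUND qualifies as an exclusion word, and after LATER and SOUND a word such as CHIMP still does.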

There are other aspects of the strategy that we could in principle test: for instance, when should we start using words with double letters? If we take the smart word start, I believe we should exclude words with double letters, and the same of course applies to the exclusion strategy too. So, all in all we can now compare four different strategies:

| Strategy | Use Smart Start? | Exclusion words |
| --- | --- | --- |
| 1 | NO | NO |
| 2 | YES | NO |
| 3 | YES | 1 |
| 4 | YES | 2 |

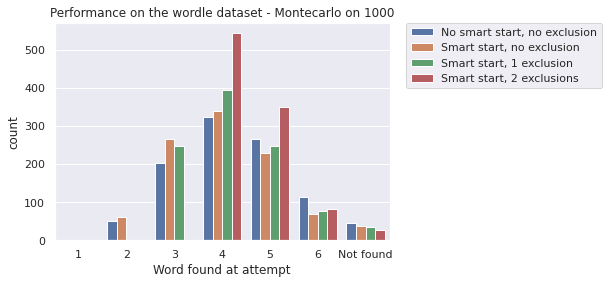

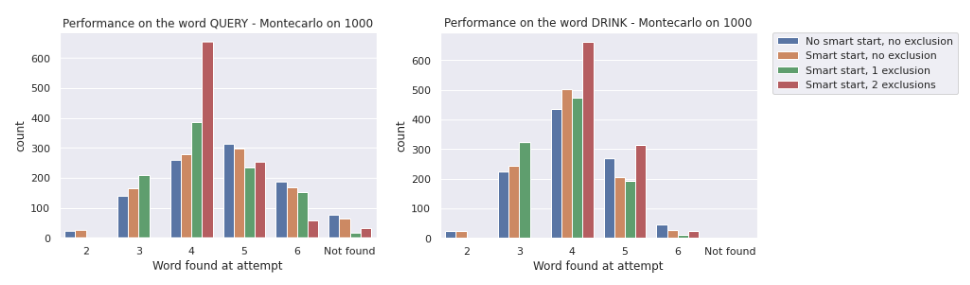

We run a Monte Carlo simulation of all four strategies, with 1000 games each, and we find the following performance per strategy:

average success attempt (strategies 1 to 4): 4.322, 4.09, 4.09, 4.263

All strategies perform very well overall, with a success rate above 95%. The distribution of successful attempts is Gaussian as expected, with a peak at attempt 4. Obviously, any exclusion strategy will preclude the possibility of guessing the word at attempt number 2. So the take-home message is the following: if we want to maximize the probability of finding the word we should use strategy #4, which gives us the highest success rate of 97%. However, that high success rate comes at the expense of renouncing the possibility of guessing the word at attempts 1-3. If we want to maximize early successful attempts (at the expense of success rate) we should go for strategies 2 or 3.

Interestingly enough, the system can also be used to evaluate how difficult a word is. For instance, in the example below we try to solve the word QUERY (left) or DRINK (right)

To solve the daily wordle

Scientific conferences at the time of COVID

The social restrictions caused by the pandemic have annihilated the galaxy of scientific conferences as we knew it. Many conferences have tried to move online, in the same way we moved 1-to-1 meetings or teaching online: we applied a linear transposition, trying to virtually recreate the very experience we were having when traveling physically (for good and for bad). I would argue that the linear translation of a physical conference to the virtual space has managed to reproduce almost all of the weaknesses while offering almost none of the advantages. This is not surprising because we basically tried to force old content onto a new medium, ignoring the limits and opportunities that the new medium could offer.

Online conferences like online journals

Something similar happened in science before, when journals transitioned from paper to the web: for years, the transition was nothing more than the digital representation of a printed page (and for many journals, this still holds true). In principle, an online journal is not bound to the physical limitation on the number of pages; it can offer new media experiences besides the classical still figure, such as videos or interactive graphs; it could offer customisable user interfaces allowing the reader to pick their favourite format (figures at the end? two columns? huge fonts?); it no longer needs to differentiate between main and supplementary figures; it costs less to produce and zero to distribute; etc, etc. Yet – how many journals are currently taking full advantage of these opportunities? The only one that springs to mind is eLife and, interestingly enough, it is not a journal that transitioned online but one that was born online to begin with. Transitions always carry a certain amount of inherent inertia with them, and the same inertia was probably at play when we started translating physical conferences to the online world. Rather than focusing on the opportunities that the new medium could offer, we spent countless efforts on trying to parrot the physical components that were limiting us. In principle, online conferences:

- Can offer unlimited participation: there is no limit to the number of delegates an online conference can accommodate.

- Can reduce costs considerably: there is no need for a venue and no need for catering; no printed abstract book; no need to reimburse the speakers’ travel expenses; unlimited participants imply no registrations, and therefore no costs linked to registration itself. The latter creates an interesting paradox: the moment we decide to limit the number of participants, we introduce an activity that we must then offset with registration fees. In other words: we ask people to pay so that we can limit their number, getting the worst of both worlds.

- Can work asynchronously to operate on multiple time zones. The actual delivery of a talk does not need to happen live, nor does the discussion.

- Can prompt new formats of delivery. We are no longer forced to be sitting in a half-lit theatre while following a talk, so we may as well abandon video tout-court and transform the talk into a podcast. This is particularly appealing because it moves the focus from the result to its meaning, something that often gets lost in physical conferences.

- Have no limit on the number of speakers or posters! Asynchronous recording means that everyone can upload a video of themselves presenting their data, even if just for a few minutes.

- Can offer more thoughtful discussions. People are not forced to come up with a question there and then. They can ask questions at their own pace (i.e. listen to the talk, think about it for hours, then engage with the community).

Instead of focusing on these advantages, we tried to recreate a virtual space featuring “rooms”, lagged video interaction, weird poster presentations, and even sponsored booths.

Practical suggestions for an online conference.

Rather than limit myself to discussing what we could do, I shall try to be more constructive and offer some practical suggestions on how all these changes could be implemented. These are the tools I have just used to organise a dummy, free-of-charge, online conference.

For live streaming: Streamyard

The easiest tool to stream live content to multiple platforms (youtube but also facebook or twitch) is streamyard. The price is very accessible (ranging from free to $40 a month) and the service is fully online, meaning that neither the organiser nor the speakers need to install any software. The video is streamed online on one or multiple platforms simultaneously, meaning that anyone can watch for free simply by going to youtube (or facebook). The tutorial below offers a good overview of the potential of this tool. Skip to minute 17 to have an idea of what can be done (and how easily it can be achieved). Once videos are streamed on youtube, they will be automatically saved on the platform and can be accessed just like any other youtube video (note: there are probably other platforms like streamyard that I am not aware of. I am not affiliated with them in any way).

For asynchronous discussion and interaction between participants: reddit

Reddit is the most popular platform for asynchronous discussions. It is free to use and properly vetted. It is already widely used to discuss science, also in an almost-live fashion called AMA (ask-me-anything). An excellent example of a scientific AMA is this one discussing the discoveries of the Event Horizon Telescope with the team of scientists behind them. Another interesting example is this AMA by Nobel Laureate Randy Schekman. As you browse through it, focus on the medium, not the content. Reddit AMAs are normally meant for dissemination to the lay public, but here we are discussing the opportunity of using them for communication with peers.

A dummy online conference.

For the sake of example, I have just created a dummy conference on reddit while I was writing this post. You can browse it here. My dummy conference at the moment features one video poster and two talks as well as a live lounge for general discussions. All this was put together in less than 10 minutes and it’s absolutely free. Customisation of the interface is possible, with graphics, CSS, flairs, user attributes etc.

Notice how there are no costs of registrations, no mailing lists for reaching participants, no zoom links to join. No expenses whatsoever. The talks would be recorded and streamed live via streamyard while the discussion would happen, live or asynchronously, on reddit. Posters could be uploaded directly by the poster’s authors who would then do an AMA on their data.

An (almost) example of great success: the World Wide series of seminars.

Both talks in my dummy conference were taken from the World Wide Neuro seminar series, the neuro branch of the World Wide Science series. Even though this is a seminar series, it is as close as it gets to what an online conference could be, and the project really has to be commended for its vision and courage. This is not the online transposition of a physical conference but rather a seminar series born from scratch to be online. Videos are still joined via zoom and the series does lack features of interaction which, in my opinion, could be easily added by merging the videos with reddit as I did in my dummy conference example.

Conclusion.

It is technically possible to create an online conference at absolutely no expense. The tools one can use are well-vetted and easy to use on all sides. This does require a bit of a paradigm shift but it is less laborious and difficult than one may think.

What is teaching and how do we do it?

What is teaching? In general terms, I believe there are two possible answers to this question. In its simplest – yet admittedly not trivial – form, teaching is the organised transfer of information from a teacher to a student. Finding the best strategy for this process may seem like a novel problem to a newly appointed university lecturer but, conceptually, it is a problem that mankind solved about 5000 years ago, during the Bronze Age, with the advent of writing. Information, especially when factual, does not require the physical co-presence of teacher and student, for it can almost always be conveyed using written words and diagrams. Especially in the Biological Sciences, a textbook is a sufficient instrument of information transfer more often than not. What we teach is factual, usually not conceptually challenging and only partly mnemonic. In general, all the factual content we offer to first- and second-year undergraduate students can easily be found in a plethora of textbooks; third-year and postgraduate content can be found in textbooks and in the academic literature. If all the information we aim to transfer can be conveniently and efficiently read on paper, why do we even bother? Why are students investing time and resources to pursue an activity that could easily be replaced by access to a library? The answer to this latter question is what I refer to as “the added value” of university teaching, and it describes the second, less obvious, and most challenging definition of what teaching really means.

My teaching philosophy has evolved around this concept of added value. What is my role as a teacher, and how can my work and presence enrich the learning experience of the student? What can I offer that a very well written book cannot? I came to the conclusion that my added value breaks down into four parts:

- Teach the student how to properly select studying material

- Identify and – when possible – transfer the skills that are needed to become a scientist

- Inject passion and wonder towards the topic

- Help students find their own personal learning pace

Teaching practice

- How to select information

Being able to recognise quality in information is a fundamental transferable skill. University libraries are normally maintained by scholars to feature only reliable sources, and therefore students learn to see the University library as a knowledge sanctuary where any source can safely be considered “trusted”. This undoubtedly helps them in selecting material, but it will not develop their critical sense. Being able to find and recognise trusted sources and reliable material should be a key transferable skill of their degree, one they will value in their careers and also as responsible citizens. To help with this goal, I encourage them – read: I force them – to go out of their literature comfort zone. In the classroom, I focus lectures on topics that are new and developing, so that students are driven to explore primary literature and not rely on textbooks when studying and revising. Another advantage of selecting developing topics is that these are often genuinely controversial. Research that is still developing is usually open to multiple, often contrasting, interpretations by scholars. I present students with the current views on the topic and ask them to dissect the arguments in one direction or another. This sharpens their critical thinking. Literature dissertations and “problem-based learning” (PBL) are also excellent exercises in browsing and picking literature. I dedicate half of the spring term to the literature dissertation in the MSc course I am currently co-directing, and I supervise PBL tutorials for the 2Yr Genes and Genomic course. Both activities are very successful.

- Identify and – when possible – transfer the skills that are needed to become a scientist

Obviously, not all our students will become scientists, but I believe they should all be trained as if they would. Thinking and acting according to the Galilean method is an undervalued transferable skill in modern society. Also, not many other universities in the world have a research environment as vibrant and successful as Imperial College London, and being exposed to real cutting-edge science is certainly an important potential added value for our students. I try to bring a scientific ethos into the classroom by presenting the actual experimental work behind a given discovery. In a second-year module on RNA interference, I present one by one the milestone papers that created and shaped the field, and I walk through the key experiments that eventually led to a Nobel prize. In the same course, I also teach about CRISPR-Cas9, presenting the controversy between the two research groups that are still fighting for the associated patent (and, most likely, for another upcoming Nobel prize). I present experiments in the way they are naturally laid out, from hypothesis to theory. I also try my best to instil a sense of criticality and a sense of “disrespect towards Aristotelian authority”. Ultimately, my goal is for students not to be afraid to contradict their teacher – as long as they can back up their claims with factual information, that is. Admittedly, this exercise does not achieve the same result with all students, as it requires a degree of self-confidence that not all students possess. It does, however, greatly benefit the most independent and intellectually acute ones, who show great appreciation both informally and formally on SOLE.

- Inject passion and wonder towards the topic

Being able to capture the students’ curiosity is possibly the most powerful skill of a teacher. My ultimate goal is to “seed” information into my lecture and let the students develop it on their own. If they develop wonder and curiosity towards a topic, they will be more likely to master it and enjoy it. There is no secret recipe for doing this – as passion must be genuine and cannot be mimicked – but I found there are four steps that definitely help.

a) focus on material that I, as a teacher, find interesting;

b) create a relationship with the students;

c) extend the lectures beyond textbook facts;

d) try to use prepared slides as little as possible.

a) and b) are factors that largely depend on the course convenors. For instance, convenors can arrange for lecturers to teach topics that are close to their own research area. This is a very easy way to generate transmissible enthusiasm. For developing b), it is normally preferable to have longer contact with the same cohort of students: I would much rather teach 8 hours in the same course than 8 hours spread across different cohorts. c) can be achieved by interleaving the lecture with historical anecdotes, for instance about the biography of the scientists behind a certain discovery, or even about events that I lived through or witnessed during my research career. This teaching style makes the lecture conversational, generates curiosity and sets a more natural, discursive pace of interpersonal communication. d) is an underestimated, very powerful exercise. In fact, I think the EDU office should actually consider a new course devoted to teaching without the use of PowerPoint slides. Not relying on anything but our own theatricality is the best way to gather the students’ undivided attention.

- Help students find their own personal learning pace

I am quite satisfied with the progress I have made so far on points 1-3, and this fourth and last step represents my next challenge as a teacher. When facing a cohort of 150 students, as we do with our 1st and 2nd year UG courses, we clearly deal with students of different academic abilities. For whom should we teach? What pace should we follow? Where shall we set the intellectual bar? I found that creating the right mix of “facts and passion” is a good strategy: the average student will receive all the facts; the more intellectually gifted one will then expand on those, inspired by the newly found passion. However, I also believe that delivering different content to different parallel streams is the one aspect where modern technology can really help. My plan for the future is to develop a series of “reverse classroom” lectures, presenting all students with prerecorded material and – possibly – with lecture notes too. The goal is to keep classroom time for passion and discussion, and to deliver the basic factual information through video and notes.

Future development

I found that my experience as a teacher so far, together with the introductory courses of the EDU team, gave me enough background in educational science. However, my goal for the future is to tackle the theoretical aspects of teaching in the same way and to the same extent as I do my research. In particular, I want to deepen my knowledge of andragogy and venture into the current literature on instructional theory.

Note.

This is something I actually had to produce for my educational training at the College. I thought it might be useful to share with the world. Apologies for the awkward writing style.

What is wrong with scientific publishing and how to fix it.

Randy Schekman’s recent decision to boycott the so-called glam-mags Cell, Nature & Science (CNS) made me realize that I never expressed on this blog my view on the problems with scientific publishing. Here it comes. First, one consideration: there are two distinct problems that have nothing to do with each other. One is the #OA issue, the other is the procedural issue. My solution addresses both, but for the sake of reasoning let’s start with the latter:

1. Peer review is not working fairly. A semi-random selection of two or three reviewers is too unrepresentative of an entire field, and more often than not papers will be poorly reviewed.

2. As a result of 1, the same journal will end up publishing papers ranging anywhere on the scale of quality, from fantastic to disastrous.

3. As a result of 2, the impact factor (IF) of the journal cannot be used as a proxy for the quality of single papers, nor even of the average paper, because the distribution is too skewed (the famous 80/20 problem).

4. As a result of 3, there is no statistical correlation between a paper being published in a high-IF journal and its actual value, and this is a problem because it is somehow commonly accepted that there should be one.

5. As a result of 4, careers and grants are made based on a faulty proxy.

6. As a result of 5, postdocs tend to wait years in the lab hoping to get that one CNS paper that will help them get the job – and obviously there are great incentives to publish fraudulent data for the same reason.
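The skew argument above can be illustrated with a toy calculation. This is only a sketch: the citation counts are invented for illustration (not real data), the helper names `impact_factor_like` and `share_from_top` are hypothetical, and the real IF is computed over a two-year citation window that this toy ignores. It simply shows how far a journal-level average can sit from what a typical paper receives.

```python
from statistics import median

# Invented citation counts for 10 papers in one hypothetical journal.
citations = [0, 1, 1, 2, 2, 3, 4, 5, 60, 122]


def impact_factor_like(counts):
    """Journal-level average: total citations divided by number of papers."""
    return sum(counts) / len(counts)


def share_from_top(counts, fraction=0.2):
    """Fraction of all citations contributed by the top `fraction` of papers."""
    ranked = sorted(counts, reverse=True)
    top_n = max(1, round(len(ranked) * fraction))
    return sum(ranked[:top_n]) / sum(ranked)


avg = impact_factor_like(citations)      # what the journal advertises
med = median(citations)                  # what a typical paper gets
top_share = share_from_top(citations)    # weight of the two best-cited papers
below_avg = sum(1 for c in citations if c < avg)

print(f"average: {avg}, median: {med}")
print(f"top 20% of papers hold {top_share:.0%} of citations")
print(f"{below_avg} of {len(citations)} papers fall below the average")
```

With these made-up numbers the average is 20 citations per paper while the median is 2.5, and eight of the ten papers fall below the journal average, which is the sense in which the aggregate says little about any single paper.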

Ok, so let’s assume tomorrow morning CNS cease to exist. They close down. How does this solve the issue? It doesn’t.

CNS are not damaging Science. They are simply sitting at the very top of the ladder of scientific publishing and they receive more attention than any other journal. Remove them from the top and we have just moved the problem a bit down the ladder, to whatever journal follows. Some people criticise CNS for being great pushers of the IF system; “to start”, they say, “CNS could help by making public the citation data of the single papers and not just the IF as a journal aggregate”. This would be an interesting move (scientists love all kinds of data) but it would do nothing to solve the problem. Papers’ quality would still be skewed, and the citation count of a single paper is not necessarily representative of its value, because bad papers, fake papers & sexy papers can end up being extremely cited anyway. Also, it takes time for papers to accumulate citations.

So what is the solution? The solution is to abolish pre-publication peer review as we know it. Just publish anything, and get an optional peer review as a service (PRaaS) if you think your colleagues may help you get a better paper out. This could create peer-reviewing companies on the free market, and scientists would get paid for professional peer review. When you are ready to submit, you send the paper to a public repository. The repository has no editing service and no printing fees. It’s free and Open Access because costs are minimal anyway. What happens to journals in this model? They still exist but their role is now different. Nature, Cell and Science no longer deal with the editorial process. Instead, they constantly look through the pool of papers published on the repository and they pick and highlight the ones they think are the best, similarly to how a music or a videogame magazine would pick and review for you the latest releases on the market. They still do their video abstracts, their podcasts, their interviews with the authors, their news and views. They still sell copies but ONLY if they actually add value.

This system solves so many problems:

- The random lottery of the peer review process is gone

- Nobody will tell you how you have to format your paper or what words you can use in your discussion

- Everything that gets published is automatically OA

- There are no publication fees

- There is still opportunity for making money, only this time in a fair way: scientists make money when they enrol for peer-review as a service; journals still continue to exist.

- Only genuinely useful journals continue to exist: all those thousands of parasitic journals that now exist just because they are easy to publish with, will perish.

Now, this is my solution. Comments are welcome.

The alternative is: I can publish 46 papers in CNS, win the Nobel prize using those papers, become editor of a journal (eLife) that does the very same thing that CNS do, and then go out in the Guardian and do my j’accuse.

Il Gruffalo

English version by Julia Donaldson

Translated into Italian by Giorgio Gilestro

Un topolino andò passeggiando per il bosco buio e pauroso

Una volpe vide il topino, che le sembrò così appetitoso

“Dove stai andando, piccolo topino?

Vieni a casa mia, che ci facciamo uno spuntino”.

“É molto gentile da parte tua, cara volpe, ma no –

Sto andando a fare pranzo con un Gruffalo”

“Un Gruffalo? E che cosa sarà mai?”

“Un Gruffalo: che non lo sai?

Ha terribili zanne

unghie storte e paurose,

e terribili denti tra le fauci pelose”

“E dove vi incontrate?”

“Proprio qui, in questo posto,

e il suo cibo preferito é volpe arrosto!”

“Volpe arrosto!” La volpe urlò,

“Ti saluto, topolino” e se la filò.

“Stupida volpe! Non ci é arrivata?

Questa storia del Gruffalo, me la sono inventata!”

Continuò il topino per il bosco pauroso,

un gufo adocchiò il topo, che gli sembrò così appetitoso.

“Dove stai andando, delizioso topetto?

Vieni a prenderti un tè sopra l’albero, nel mio buchetto”

“É paurosamente gentile da parte tua, Gufo, ma no –

Il tè lo vado a prendere col Gruffalo”

“Il Gruffalo? E che cosa é mai?”

“Un Gruffalo: che non lo sai?

Ha ginocchia sbilenche,

e unghione paurose,

e sulla punta del naso, pustole velenose”

“E dove lo incontri?”

“Al ruscello, lì sul lato,

E il suo cibo preferito é gufo con gelato”

“Gufo e gelato?” Guuguu, guugoo.

“Ti saluto topino!” e il gufo arruffato decollò.

“Stupido gufo! Non ci é arrivato?

Il racconto del Gruffalo, me lo sono inventato!”

Il topino continuò per il bosco pauroso,

Un serpente lo vide, e gli sembrò così appetitoso.

“Dove stai andando, piccolo topino?

Vieni a un banchetto con me, in quell’angolino.”

“É terribilmente gentile da parte tua, serpente, ma no –

Al banchetto ci vado col Gruffalo!”

“Il Gruffalo? E cosa é mai?”

“Un Gruffalo! Che non lo sai?

Ha occhi arancioni,

e lingua nera e scura,

e sulla schiena: aculei violacei che fanno paura”

“Dove lo incontri?”

“Qui, al laghetto incantato,

e il suo cibo preferito é serpente strapazzato.”

“Serpente strapazzato? Lascio la scia!

Ciao ciao topolino” e il serpente scivolò via.

“Stupido serpente! Non l’ha capito?

Questo affare del Gruffalo me lo sono inventa….

TOOOOO!”

Ma chi é questo mostro

con terribili zanne

e unghie paurose,

e terribili denti tra le fauci pelose.

Ha ginocchia sbilenche,

e unghione paurose,

e sulla punta del naso, pustolone velenose.

Occhi arancioni,

e lingua nera e scura,

e sulla schiena: aculei violacei che fanno paura”

“Oh, Aiuto! Oh no!

Non é un semplice mostro: é il Gruffalo!”

“Il mio cibo preferito!” Disse il Gruffalo affamato,

“Sarai delizioso, sul pane imburrato!”

“Delizioso?” Disse il topino. “Non lo direi al tuo posto!”

Sono l’esserino più pauroso di tutto il bosco.

Passeggiamo un po’ e ti farò vedere,

come tutti gli animali corrono quando mi vedono arrivare!

“Va bene!” disse il Gruffalo esplodendo in un risatone tetro,

“Tu vai avanti che io ti vengo dietro”.

Camminarono e camminarono, finchè il Gruffalo ammonì:

“sento come un sibilo tra quel fogliame lì”.

“É il serpente!” disse il topo “Ciao Serpente, ben trovato!”

Serpente sbarrò gli occhi e guardò il Gruffalo, imbambolato.

“Accipicchia!” disse, “Ciao ciao topolino!”

E veloce si infilò nel suo rifugino.

“Vedi”, disse il topo. “Che ti ho detto?”

“Incredibile!”, esclamò il Gruffalo esterrefatto.

Camminarono ancora un po’, finchè il Gruffalo ammonì:

“sento come un fischio tra quegli alberi li’”.

“É il Gufo”, disse il topo “Ciao Gufo, ben trovato!”

Gufo sbarrò gli occhi e guardò il Gruffalo, imbambolato.

“Cavoletti!” disse “Ciao ciao topolino!”

E veloce volò via nel suo rifugino.

“Vedi?”, disse il topo. “Che ti ho detto?”

“Sbalorditivo”, esclamò il Gruffalo esterrefatto.

Camminarono ancora un po’, finchè il Gruffalo ammonì:

“sento come dei passi in quel sentiero li’”.

“É Volpe”, disse il topo “Ciao Volpe, ben trovata!”

Volpe sbarrò gli occhi e guardò il Gruffalo, imbambolata.

“Aiuto!” disse, “Ciao ciao topolino!”

E come una saetta corse nel suo rifugino.

“Allora, Gruffalo”, disse il topo. “Sei convinto ora, eh’?

Hanno tutti paura e terrore di me!

Ma sai una cosa? Ora ho fame e la mia pancia borbotta.

Il mio cibo preferito é: pasta Gruffalo e ricotta!”

“Gruffalo e ricotta?!” Il Gruffalo urlò,

e veloce come il vento se la filò.

Tutto era calmo nel bosco buio e pauroso.

Il topino trovò un nocciolo: che era tanto tanto appetitoso.

Pietro loves the Gruffalo. He likes to alternate the original English version with a customized Italian one, so I came up with this translation. Only once I had completed the translation did I realize that an official Italian version was actually on sale. It’s titled “A spasso col mostro” and can be read here. I like mine better, of course.

The Japanese Taxis

Some say I have a peculiar tendency to dissect all of my experiences and place them in labelled boxes for the sake of understanding. It’s possibly true, and it is in the same spirit that I found myself dissecting Japan during my recent visit there. I was invited to teach at a Summer School for Master students in Biology and Computing at the Tokyo Institute of Technology and I decided to spend a few more days sightseeing. So for those who ask what I found most striking about Japan, my answer is going to be “Taxis”.

Taxis like this are everywhere. Why are they special? Look at the car. I am not sure what model it is, but one can easily bet that this car was produced and sold sometime in the 80’s, and then somehow time stopped and it never got old. It is perfectly polished, with no wear and tear and not a single sign of ageing, inside or out. The car is fitted with improbable technological wonders straight out of an 80’s sci-fi movie: absolutely emblematic is the spring-operated mechanism that opens and closes the rear door. This taxi represents Japan for me. This is a country that lived through a huge economic boom from after the war all the way to the late 80’s, with annual growth that surpassed any historical record. Then, suddenly, in 1991, it met an equally dramatic economic crisis when a giant bubble burst, and everything stopped. Economists call the following years The Lost Decade because not much moved after the burst and, let me tell you, it is perfectly visible. To this day, Japan has not really recovered from the bubble.

The reaction of the Japanese central bank to the ’91 bubble was somewhat similar to what we observe nowadays in the rest of the world after the 2008 crisis: quantitative easing, continuous bailouts and a rush to save banks. Bailouts were so common that Japan in the 90’s was said to be “A loser heaven”. And yet, society responded very differently: the unemployment rate did not really skyrocket as it is doing now pretty much everywhere else. Unemployment in Japan remains one of the lowest worldwide, and a huge deflation took place instead. My naive gut feeling cannot help but put these two things together, but I don’t know enough to claim with certainty that one is really a consequence of the other, so go look for an answer somewhere else, and let me know if I was right, please. Anyhow, what is interesting is that people kept their jobs, albeit with decreasing salaries, and that meant society didn’t really collapse but simply froze. And that is why travelling in Japan is like travelling back in time to the early 90’s: everything, from the furniture in hotel rooms to cars and even clothing and fashion, stopped in 1991. Granted, it’s a technologically advanced 1991, filled with the wonders of the time. Remember the dashboard of the DeLorean from Back to the Future (1985)? Yes, that is what Japan looks like. Why did things not get old? Why does the taxi above still look mint new? A Japanese friend gave me the answer: Japan has a tradition of conservation as opposed to consumerism. In Japan, a tool that is important for your life or job is a tool to which you must dedicate extreme care. Thus, taxi drivers polish and clean and care for and caress their cars as the Samurai took care of their swords. Paraphrasing the first Tokugawa shogun, Tokugawa Ieyasu: “the taxi is the soul of the taxi driver” [1]. Here, some pictures from the trip.

1. Well, the original citation would be “The sword is the soul of the Samurai”. This goes a bit off topic, but during one of my jet-lagged nights I found this video on the history of Samurai swords quite interesting.

Can your lab book do this?

When I was a student I used to be a disaster at keeping lab books. Possibly because they weren’t terribly useful to me, since back then I had an encyclopedic memory for experimental details, or possibly because I never was much of a paper guy. As I grew older and my memory started to shrink (oh god, did it shrink!), I started transforming data into manuscripts and, as a consequence, I began to appreciate the convenience of going back 6 months in time and recovering raw data. Being a computer freak, I decided to give up on the paper lab book (I was truly hopeless) and turned to digital archiving instead. As they say, to each their own! Digital archiving really did it for me and enormously improved my productivity. One of the key factors, to be honest, was the very early adoption of sync tools like Dropbox that let me work on my stuff from home or the office without any hassle.

As soon as I started having students, though, I realized that I needed a different system to share data and results with the lab. After a bit of experimentation that led nowhere, I can now finally say I found the perfect sharing tool within the lab: a blog content manager promoted to shared lab book (here). This is what it looks like:

This required some tweaking but I can now say it works just perfectly. If you think about it, a blog is nothing less than a b(ook) log, so what better instrument to keep a lab book log? Each student gets their own account as soon as they join the lab, and day after day they write down successes and frustrations, attaching raw data, figures, spreadsheets, tables and links. Here are some of the rules and guidance they need to follow. Not only can I go there daily and read about their results on my way home or after dinner, but I can quickly recall things with a click of the mouse. Also, as a bonus, all data are backed up daily on the Amazon cloud and every single page can be printed as PDF or on paper if needed. As you can see in the red squares in the above picture, I can browse data by student, by day, by project name or by experiment. That means that if I click on the name of a project I get all the experiments associated with it, no matter who did them. If I click on an experimental tag (for instance PCR) I get all the PCRs run by all the people in the lab.
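For readers who think in code, the browsing described above amounts to filtering entries on a few fields. Here is a minimal sketch in Python, assuming a hypothetical `Entry` record and `browse` helper; the blog software does this through its own categories and tags, and none of these names or sample entries come from it.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Entry:
    """One lab book post (hypothetical structure, for illustration only)."""
    author: str
    day: date
    project: str
    tags: set = field(default_factory=set)


def browse(entries, author=None, day=None, project=None, tag=None):
    """Return the entries matching every filter that was given."""
    return [
        e for e in entries
        if (author is None or e.author == author)
        and (day is None or e.day == day)
        and (project is None or e.project == project)
        and (tag is None or tag in e.tags)
    ]


# Made-up entries from two students working on two projects.
entries = [
    Entry("alice", date(2013, 5, 6), "sleep", {"PCR"}),
    Entry("bob", date(2013, 5, 6), "sleep", {"western"}),
    Entry("bob", date(2013, 5, 7), "feeding", {"PCR", "cloning"}),
]

pcr_runs = browse(entries, tag="PCR")          # every PCR, whoever ran it
sleep_work = browse(entries, project="sleep")  # every experiment in a project
```

Filters combine, so `browse(entries, author="bob", tag="PCR")` would return only the PCRs run by one student.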

Except for the protocols, all content is set to be seen only by members of the lab. However, inspired by this paper, I decided that each project will be flagged as public as soon as its results are published.