Lots of smoke, hardly any gun. Do climatologists falsify data?

One of climate change denialists’ favorite arguments concerns the fact that not always can weather station temperature data be used as raw. Sometimes they need to be adjusted. Adjustments are necessary in order to compensate with changes the happened over time either to the station itself or to the way data were collected: if the weather station gets a new shelter or gets relocated, for instance, we have to account for that and adjust the new values; if the time of the day at which we read a certain temperature has changed from morning to afternoon, we would have to adjust for that too. Adjustments and homogenisation are necessary in order to be able to compare or pull together data coming from different stations or different times.

Some denialists have problems understanding the very need for adjustments – and they seem rather scared by the word itself. Others, like Willis Eschenbach at What’s up with that, fully understand the concept but still look at it as a somehow fishy procedure. Denialists’ bottom line is that adjustments do interfere with readings and if they are biased toward one direction they may actually create a warming that doesn’t actually exist: either by accident or as a result of fraud.

To prove this argument they recurrently show this or that probe to have weird adjustment values and if they find a warming adjustment they often conclude that data are bad – and possibly people too. Now, let’s forget for a moment that warming measurements go way beyond meteorological surface temperatures. Let’s forget satellite measurements and let’s forget that data are collected by dozens of meteorological organizations and processed in several datasets. Let’s pretend, for the sake of argument, that scientists are really trying to “heat up” measurements in order to make the planet appear warmer than it really is.

How do you prove that? Not by looking at the single probes of course but at the big picture, trying to figure out whether adjustments are used as a way to correct errors or whether they are actually a way to introduce a bias. In science, error is good, bias is bad. If we think that a bias is introduced, we should expect the majority of probes to have a warming adjustment. If the error correction is genuine, on the other hand, you’d expect a normal distribution.

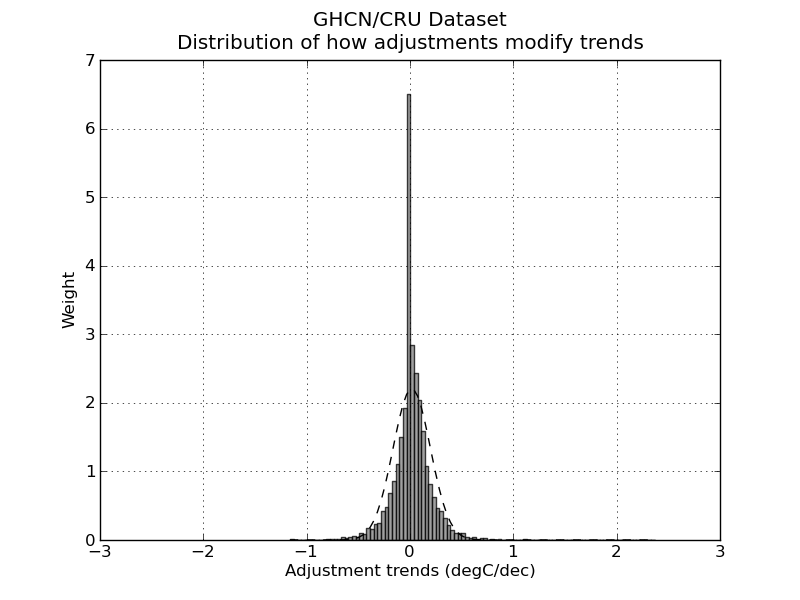

So, let’s have look. I took the GHCN dataset available here and compared all the adjusted data (v2.mean_adj) to their raw counterpart (v2.mean). The GHCN raw dataset consists of more than 13000 station data, but of these only about half (6737) pass the initial quality control and end up in the final (adjusted) dataset. I calculated the difference for each pair of raw vs adj data and quantified the adjustment as the trend of warming or cooling in degC per decade. I got in this way a set of 6533 adjustments (that is, 97% of the total – a couple of hundreds were lost in the way due to the quality of the readings). Did I find the smoking gun? Nope.

Not surprisingly, the distribution of adjustment trends2 is a quasi-normal3 distribution with a peak pretty much around 0 (0 is the median adjustment and 0.017 C/decade is the average adjustment – the planet-warming trend in the last century has been about 0.2 C/decade). In other words, most adjustments hardly modify the reading, and the warming and cooling adjustments end up compensating each other1,5. I am sure this is no big surprise. The point of this analysis is not to check the good faith of people handling the data: that is not under scrutiny (and not because I trust the scientists but because I trust the scientific method).

The point is actually to show the denialists that going probe after probe cherry-picking those with a “weird” adjustment is a waste of time. Please stop the nonsense.

Edit December 13.

Following the interesting input in the comments, I added a few notes to clarify what I did. I also feel like I should explain better what we learn from all this, so I add a new paragraph here (in fact, it’s just a comment promoted to paragraph).

How do you evaluate whether adjustments are a good thing?

To start, you have to think about why you want to adjust data in the first place. The goal of the adjustments is to modify your reading so that they could be easily compared (a) inter-probes and (b) intra-probes. In other words: you do it because you want to (a) be able to compare the measures you take today with the ones you took 10 years ago at the same spot and (b) be able to compare the measures you take with the ones your next-door neighbor is taking.

So, in short, you do want your adjustment to siginificatively modify your data – this is the whole point of it! Now, how do you make sure you do it properly? If I were to be in charge of the adjustment I would do two things. 1) Find another dataset – one that possibly doesn’t need adjustments at all – to compare my stuff with: it doesn’t have to cover the entire period, it just has to overlap enough to be used as a test for my system. The satellite measurements are good for this. If we see that our adjusted data go along well with the satellite measurements from 1980 to 2000, then we can be pretty confident that our way of adjusting data is going to be good also before 1980. There are limits, but it’s pretty damn good. Alternatively, you can use a dataset from a completely different source. If the two datasets arise from different stations, go through different processings and yet yield the same results, you can go home happy.

Another way of doing it is to remember that a mathematical adjustment is just a trick to overcome a lack of information on our side. We can take a random sample of probes and do a statistical adjustment. Then go back and look at the history of the station. For instance: our statistical adjustment is telling us that a certain probe needs to be shifted +1 in 1941 but of course it will not tell us why. So we go back to the metadata and we find that in 1941 there was a major change in the history of our weather station, for instance, war and subsequent move of the probe. Bingo! It means our statistical tools were very good in reconstructing the actual events of history. Another strong argument that our adjustments are doing a good job.

Did we do any of those things here? Nope. Neither I, nor you, nor Willis Eschenbach nor anyone else on this page actually tested whether adjustments were good! Not even remotely so.

What did we do? We tried to answer a different question, that is: are these adjustments “suspicious”? Do we have enough information to think that scientists are cooking the data? How did we test so?

Willis picked a random probe and decided that the adjustment he saw were suspicious. End of the story. If you think about it, all his post is entirely concentrated around figure 8, which simply is a plot of the difference between adjusted data and raw data. So, there is no value whatsoever in doing that. I am sorry to go blunt on Willis like this – but that is what he did and I cannot hide it. No information at all.

What did I do? I just went a step back and asked myself: is there actually a reason in the first place to think that scientists are cooking data? I did what is called a unilaterally informative experiment. Experiments can be bilaterally informative when you learn something no matter what the outcome of the experiment is (these are the best); unilaterally informative when you learn something only if you get a specific outcome and in the other case you cannot draw conclusions; not informative experiments.

My test was to look for a bias in the dataset. If I were to find that the adjustments are introducing a strong bias then I would know that maybe scientists were cooking the data. I cannot be sure about it, though, because (remember!) the whole point of doing adjustments is to change data in the first place!. It is possible that most stations suffer of the same flaws and therefore need adjustments going in the same direction. That is why if my experiment were to lead to a biased outcome, it would not have been informative.

On the other hand, I found instead that the adjustments themselves hardly change the value of readings at all and that means I can be pretty positive that scientists are not cooking data. This is why my experiment was unilaterally informative. I was lucky.

This is not a perfect experiment though because, as someone pointed out, there could be a caveat. One caveat is that in former times the distributions of probes was not as dense as it is today and since the global temperature is calculated doing spatial averages, you may overrepresent warming or cooling adjustments in a few areas while still maintaining a pretty symmetrical distribution. So, to test this you would have to check the distribution not for the entire sample as I did but grid by grid. (I am not going to do this because I believe is a waste of time but if someone wants to, be my guest).

Finding the right relationship between the experiment you are doing and the claim you make is crucial in science.

Notes.

1) Nick Stockes, in this comment, posts an R code to do exactly the same thing confirming the result.

2) What I consider here is the trend of the adjustment not the average of the adjustment. Considering the average would be methodologically wrong. This graph and this graph have both averages of adjustment 0, yet the first one has trend 0 (and does not produce warming) while the second one has trend 0.4C/decade and produces 0.4C decade warming. If we were to consider average we would erroneously place the latter graph in the wrong category.

3) Not mathematically normal as pointed out by dt in the comments – don’t do parametric statistics on it.

4) The python scripts used for the quick and dirty analysis can be downloaded as tar.gz here or zip here

5) RealClimate.org found something very similar but with a more elegant approach and on a different dataset. Again, their goal (like mine) is not to add pieces of scientific evidence to the discussion, because these tests are actually simple and nice but, let’s face it, quite trivial. The goal is really to show to the blogosphere what kind of analysis should be done in order to properly address this kind of issue, if one really wants to.

thanks. very good analysis.

though i fear the “sceptics” will ignore it…

Giorgio this is a nice analysis that shows that the adjustments appear to be near normally distributed with a mean close to zero for the station data. However this still leaves unresolved the question of how these adjustments propagate through to a global ‘average’ temperature. The geographic spread and local densities of stations is very uneven. It is still possible to have a near normal distribution of adjustments with mean close to zero that still propagates through to an overall positive correction to the global trend.

It also leaves unresolved, for me at least, the nature of the algorithm and it’s operation with regard to the adjustments. As a scientist can you tell me that there is nothing odd with the adjustments that have been highlighted for Darwin. There may be similar issues with other stations.

Now it might be that by luck we end up with a near normal distribution centred on zero (unlikley given the number of stations) or perhaps there is something inherent in the algorithms for the adjustments that [produce this distribution. I don’t know the answers.

Honestly, I don’t see how this is possible. I suppose technically it would be possible to adjust the data so to change local events, for instance making sand deserts even hotter and poles even colder. That would create alarm and yet leave average zero. But then again, you can test that if you want. The file v2.temperature.inv contains the coordinates of all stations and in the zip I link there is a text file called result.txt containing all the adjustments.

Yes, I think there is nothing odd. There are about 6000 probes out there only in the CRU dataset. A few of them will be at the extreme sides of the distribution I show here and will look suspiciously too warm or too cold. That is actually exactly what you expect, though. If I were to find anything but a normal distribution then I would actually be worried. I am pretty sure a test like this one is done and maybe even published by GHCN itself. Normality test are routine way to check for bias.

I think a more insightful analysis would identify how adjustments affect trends. Given three temperatures: 22, 23, 24; make two adjustments to yield: 24, 23, 22 and while the change fits nicely into your analysis the effect is somewhat more significant… Would you agree?

JR

@John Reynolds

Hi John – sorry I am not sure I understand what you are suggesting. I already calculated the trend of adjustment per decade so to take into account not only the steepness of the adjustment but also the duration. Is that what you are saying?

I think what I was looking for can be found in the graph labeled Mean Annual GHCN Adjustment at:

http://statpad.wordpress.com/2009/12/12/ghcn-and-adjustment-trends/

John, the relation between the Romanm analysis and GG’s is this. There are two variables – station and year. Romanm calculates a summary statistic over stations (average) and graphs it by year. GG calculates a summary stat by year (trend) and shows the distribution over stations.

GG then calculates a summary stat over stations – the average, 0.0175 C/decade. The corresponding stat for Romanm is the trend over time, and it’s best to weight this by number of stations (in each year). That comes to 0.0170 C/decade. That’s the average slope of his curve.

It’s just two ways of looking at the same data. Completely consistent.

Nick-

I think you miss the significance of Romanm’s analysis. Yes, his graph shows ~ the same average slope of 0.017 C/decade. However, by graphing this over time, you can see that this average is composed of a significant downslope prior to ~1910, followed by a significant upslope after ~1910.

In other words, the adjustments reduce the appearance of global warming before ~1910, and increase it’s appearance afterwards ( up until ~1990, at which point there is a strange drop.)

There may be valid reasons for the adjustments, and even a valid reason that they slope down before 1910 and up afterwards. But it is not enough to say that since they mostly cancel each other out then they are not significant.

Another important consideration is the spacial distribution of the adjusted data. If 1% of the total useful stations is used to describe 10% of the total area while 20% is used to describe 2%, a serious bias could be introduced while leaving the distribution of adjustments overall as you describe.

Lou

@Lou. The scenario you describe would have an influence on the GCM models where data are fed by grid so the bias should technically be calculated grid by grid. A cheat like this would be very easy to test though. It would be enough to check the distribution of the adjustment of only the last 20 years when the spacial density of stations is much higher.

We need a volunteer…

gg, your method is a nice idea, so assuming you did it correctly, it’s a good contribution. Want to repeat it for GISS and GHCN?

In case it gets lost in the thread at WUWT, I recommend you look at a similar effort, Peterson and Easterling, “The effect of artificial discontinuities on recent trends in minimum and maximum temperatures”, Atmospheric Research 37 (1995) 19-26. They look at the overall effect of GHCN homogeneity adjustments on mean trends in the entire NH, and then different regions. They see small effects for max temps on a hemisphere-wide basis; no effect for min temps. Surely somebody has published something similar more recently, but it’s what I have on hand.

Also, they note that adjustments won’t necessarily be random. For example, if many sites in a country switch from old to new thermometers at about the same time, that’s very much a non-random effect in that country at that time.

@carrot eater. Thanks for the reference. I’ll look at it. I am not surprise to see data like this are published. My all point was just to highlight the fact that if one wants to find out misbehaviour in adjustments oughta look at the big pictures, not the single probes.

Is this an evaluation of each decade of each station, or an evaluation of the overall trendline of the station data? Is there some approach here to resolve which decade the station data set is applicable? Adjustments plotted from individual stations generally indicate pre-1960 trend adjustments to effect the trend in the opposite direction than the post-1960 adjustments. Just trying to understand what presented here.

@crashexx. Overall trendline, then divided by decade. You could do it decade by decade and split the data: I’d expect to see most corrections in former times, when readings were more prone to errors.

Amazing work. As a skeptic of both sides of this debate I think the urban heating issue is overblown and that Anthony Watts should indeed release the fifteen minute plot that likely disproves the hypothesis of his whole folk science surface station project! Only a few actual cities show visually anomalous warming versus a linear trend going back ~ 300 years. That’s bad news for both sides since it’s really hard to explain why so many old cities with continuous records show neither pronounced urban heating nor a strong AGW signal (see: http://i47.tinypic.com/2zgt4ly.jpg and http://i45.tinypic.com/125rs3m.jpg). Only a few of the dozen or two very old records show excess warming in recent times. Having thus “proven” that both sides are trying to bully the slow warming trend of the centuries I hereby declare nuts. When one side wants to sell me hockey sticks made of exotic non-linear-growth wood and the other wants to sell me a conspiracy theory, I grab for my thermometers!

That said, your presentation is incomplete. You haven’t submitted the actual numerical % bias either way which is something the eye can’t measure. If there are corrections for urban heating there should be a clear bias for downward adjustment. Not being a Python user I only have your image to rely on..so..I integrated its pixels and still cannot tell if there is + or – bias since my pixelation correction is suspect.

If there is indeed no negative bias as you claim then it means urban heating is not being addressed at all, does it not? The skeptics claim that’s exactly the problem so unless you post a positive number (% bias) then you are in fact supporting their argument and only attacking straw man claims that there is a massively obvious hoax going, where in fact a very subtle and even subconscious one involving observation bias is the real suspicion.

See: http://i48.tinypic.com/2q0t47q.jpg

What’s that number?

@NikFromNYC. In the zip file I link at the end of the post there is a file called result.txt with all the adjustment. It’s CSV formatted so you can actually open it with anything you like, excel or matlab or whatever you use. From a mathematical point of view, the actual number of adjustments is not really important though, what it is important is the overall result. I checked right now, though: out of 6533 measures, 845 are = 0; 2490 0.

The simplest result is that the average of 6737 adjustments in your results.txt file (with 204 having no values calculated) is + 0.017. Over 100 years wouldn’t that turn out as 0.017 X 100 = 1.7° C warming added due to adjustment? I’m not sure exactly what your adjustment value is calculated as. I’m assuming slope (degrees/years). The vast majority of adjustments are randomly distributed as expected, but a urban heating adjustment would not be random and should never be positive (am I wrong?), so we should see a negative adjustment overall, not positive.

An added 1.7° per century warming slope is due to adjustments? Is my slope calculation correct?

What it the right way to analyze such results? Hmmm…. I’ll have to mull that over, not being a statistician. Here’s a quickie analysis.

The average + adjustment = 0.103

The average – adjustment = -0.092

Both round to 0.1. So the strength of adjustments raises no eyebrows.

Number of + adjustments = 3198 (28% more than the number of – ones!)

Number of – adjustments = 2490

Number of 0 adjustments = 845

Non-zero adjustments that are + = 56%

Non-zero adjustments that are – = 44%

If my analysis is correct, your declaration of victory was premature.

I see your histogram and raise it with a pie chart:

http://i50.tinypic.com/14ttyf5.jpg

Yet this doesn’t jibe with your histogram at all. What am I missing? Is your histogram reproducible? I’m not up to speed with Excel on making one. Ah, I misunderstood it somewhat. My linear pixel count didn’t weight the pixels with their adjustment value. Sure, there are a lot more *tiny* negative adjustments (the huge peak in the center is just to the left of 0) but there is a heavier number of bars indicating large positive adjustments than large negative ones. It’s not the big center of near neutral adjustments that matter, but the outer bars that matter and clearly, by eye, one can see that the positive side has more substance to it than the negative side. If you squint. Sorry I don’t see it as clearly now, by eye. Ah…here we go! Just erase the two center peaks which are both near-zero corrections anyway:

http://i50.tinypic.com/16m51qc.jpg

Now you don’t have to squint.

I kind of lost you with all this histogram thing.

The average is 0.017 per decade, that makes is 0.17 per century (about 8.5%).

The fact that positive adjustment outnumber negative adjustments, as I just said, doesn’t mean much because they add up to zero. I don’t know how I could explain this any further, it seems very basic math to me.

Ah…it is indeed labeled degC/dec. The argument now only amounts to the “glaring fact” that no negative correction for urban heating is evident as I had expected. Looking into it though, I believe it’s GISS that does urban heat adjustments instead of GHCN so you have indeed shown that there is no great bias, overall, in GHCN adjustments despite the fact that individual stations may show seemingly suspicious adjustments. GHCN seems to only adjust for time of observation (TOBS), missing data estimation (FILNET), station history (SHAP), and transition to electronic thermometer units (MMTS).

So I have “the number”: GHCN adjustments add a non-trivial but unsuspicious 0.17° C per century. That represents 8.5% of a “2° rise per century”.

Though positive adjustments out-number negative ones by a large margin (12%), and though the histogram presented hides this fact, the magnitude of the adjustments are quite small so the histogram is fair play.

If the magnitude of adjustment was quite large, using such a histogram to hide a 12% positive bias would be fraudulent, since it would indeed quite effectively hide it.

The only remaining conspiracy might be to retain the slope of individual stations while altering them to crowd all of the warming into the last decade or two to support AGW theory. To do that without changing the slope by more than 0.17° C per century would require the determined and self-aware logic of a whole army of devious psychopaths and could not be created through mere observation bias or rogue “over enthusiasm” that played out within the limits of TOBS, FILNET, SHAP and MMTS adjustments. Thus your work exonerates GHCN from accusations that tweaks to individual stations have been used to hide a proverbial lack of recent warming.

1) Do land air temps even tell us that much about global average temp? I don’t see how they could be called on to do much more than give a hint of a trend. If there really is such a thing an an urban heat island effect I don’t see how you could hope to adjust for that without losing your evidence for the trend.

2) I can see a way that the above analysis would be insufficient. You’ve mentioned two different processes – adjustment and “homogenization.” If I understand correctly what “homogenization” means, it seems that you should first have to calculate how much weight each station is given after the “homogenization” process. It’s not hard to imagine a scenario where systematic homogenization becomes systematic upward temperature adjustment. As I indicated in my first paragraph, average air temperatures from land readings don’t tell us that much about average global temperature to say nothing of the real thing that should be considered – average global heat.

There is also an interesting semantic question here. If a denialist accuses a climatologist of falsifying data does that mean the denialist is suggesting something nefarious? I would say no. Data can easily be falsified unwittingly by careless scientists who are unmindful of issues like confirmation bias (for more info on types of bias see Sackett DL. Bias in analytic research. J Chronic Dis. 1979;32(1-2):51-63). The question I have is: are climatologists careful scientists?

I am not going to answer points 1 and 2 because I am not sure I understood what is the link with the discussion we are having. It seems to me we moved to “thermometers are not useful anyway“.

I am going to answer to the following point, though.

Climatologists are not different from any other scientists. I am no climatologist myself, I am neurobiologist, and as I wrote in the post I don’t really care to know about the single scientists. In fact I do know that most of my bio-colleagues are not less assholes than average Joe in the street. That is not the point. You don’t have to trust scientists as people, you have to trust the scientific method. I can ensure you that if tomorrow I publish a paper about a new theory of sleep (which is what I work on) plenty of other scientists will start to work FULL TIME to try to find flaw in my theory and kick my ass because the competition in Science is bloody – money are a very limited resource + scientists tend to have big ego. The same has happened and happens every day in any other field of science, including climate change. After you know how actually difficult it is to buid a consensus, you start to appreciate also how valuable it is. What sceptics do is to forget or ignore all this.

I think a post like the one I just wrote – and the comments that arose from it – show that if the question is well posed and if the problem is well addressed, it can be actually very easy for people to come out with good tests for genuinity of data. Many sceptics lack the ability to understand what the question is and how to address it, possibly because they lack the forma mentis. What Willis Eschenbach did may even be methodologically sound but it is still pointless. If you want to test the hypothesis “are data good?” there is no point whatsoever going after single probes. If data are somehow loaded, then you would see that from the big picture, like I did.

gg, you stated, “…you have to trust the scientific method.” Excellent point! But where is the scientific method in any of this? Please show us. The scientific method is based in part upon the obligation of cooperation, and full transparency of all of the data and methodologies, and any other information used to construct a hypothesis, so that skeptical scientists [the only honest kind] can reproduce and test their experiments with the methods and data they used to arrive at the hypothesis, which claims that AGW is caused primarily by human emitted CO2.

But the purveyors of that hypothesis still adamantly refuse to cooperate with requests for data and/or methods used by the CRU, Michael Mann and others in their clique.

Throughout their leaked emails they are seen to be putting their heads together and strategizing on how to thwart legitimate requests for their data and methods, to the point that they repeatedly connive to corrupt the peer review journal system and destroy data, rather than cooperate with even one of the many dozens of lawful FOI requests.

Therefore, there is NO scientific method being practiced by the promoters of this hypothesis. There is only their public conjecture, supported by secrecy. Only their partners in crime are allowed to be privy to their information; conversely, it may be that there exists little legitimate data, and they have been winging it with cherry-picked, massaged numbers that are so corrupted that disclosing them would make these government scientists a laughingstock within the broad scientific research community.

Until the taxpayer-funded catastrophic AGW purveyors fully and transparently cooperate with others, everything they say is suspect. Their endless barking takes the place of the science they purportedly used to arrive at their questionable conclusions.

The enormous amounts of grant money being funneled to only one side of the debate [the AGW side] by numerous Foundations with an AGW agenda [Heinz, Grantham, Tides, and many others] and by billionaires has thoroughly corrupted climate science, and its associated peer review/journal process. How could it not? As you point out, scientists are people and money is a limited resource.

In Mr Eschenbach’s defense, he is as unpaid for his efforts as were Albert Einstein, Ignaz Semmelweiss, and numerous other amateur scientists. Unlike the CRU crew, Mr Eschenbach has willingly answered questions, and he has admitted when he was in error — quite unlike the mendacious Michael Mann, for example, whose hockey stick chart has been so thoroughly debunked that it can no longer used by the UN/IPCC in their assessment reports [and make no mistake: the IPCC LOVED Mann’s hockey stick chart. It was far better visually than any of the pale imitations that are now published in its place.] To this day Mann refuses to cooperate with others regarding his data and methodologies — the sign of a scientific charlatan.

You ask, “are climatologists careful scientists?” Based on the exposed internal emails and code, the answer is obviously: No. But the excuse of sloppiness gives far too much wiggle room to these conniving grant hounds. For example, this from the Harry_Read_Me file:

“Here, the expected 1990 – 2003 period is missing – so the correlations aren’t so hot! Yet, the WMO codes and station names/dates are identical (or close). What the hell is supposed to happen here? Oh, yeah – there is no ’supposed’, I can make it up. So I have.”

They are sloppy scientists. That is obvious throughout their code comments. But they are more: they are dishonest scientists who invented temperature data out of whole cloth; they made it up. And that is the reason they refuse to cooperate, as the scientific method requires. If they cooperated, their gaming of the system for money and status would be exposed to the world. So they stonewall. It is their only option.

I agree that your analysis is good, clever, and useful. I apologize for not incorporating that in my original comment.

I think the statement that confused me is this:

“Adjustments and homogenitations are necessary in order to be able to compare or pull together data coming from different stations or different times.”

I took that to mean that two separate operations were being done. I will admit I didn’t not read your entire post carefully.

On the issue of carefulness: I would prefer to trust the methods of engineers to scientists, and if we are building climate models with so much staked on their correct operation, I wonder if that is really an exercise in science or in engineering? My impression is that scientists will gamble more than engineers because they are often seeking recognition from a breakthrough or a discovery; an engineer, on the other hand must do what he does safely and economically. I am not saying that there is not science to be done to establish certain climate processes and climate numbers, but perhaps there is time to do it all carefully. Do we really know how urgent the climate crisis is? I understand that the sea is swallowing Venice but surely part of that problem is because land under Venice is subsiding and will continue to subside regardless of what happens to sea levels.

There are several types of adjustments made to the data: hommogenization, which is the combining of several records for the same station to eliminate the effects of station moves and equipment changes; TOD adjustments, which are made to account to changes in the time of day that readings are taken; and urban heat island corrections which are done by GISS, but not anyone else to my knowledge. Since gg is analyzing the GHCN data he is only looking at homogenization.

This is a very nice analysis, and is really the last word on this entire fabricated scandal.

It demonstrates beyond any reasonable doubt that there has been no data manipulation. Even if one were to persist in claiming manipulation, one would still have to accept that the average trend from CRU is less than 10% different than the average trend of the raw data. (I’ve done a similar analysis (though in a slightly different way – we’ll have a post on this on RealClimate) — and we get the same result.)

It has been claimed that ‘possible adjustments’ to the data could change our estimates of climate sensitivity. As I’ve explained elsewhere*, this is false. But even supposing it were true, it would only be a 10% adjustment of so.

Does anyone seriously think that international policies on climate change should be different because the expected temperature rise by 2100 might be 4.5 C instead of 5.0?

All of this demonstrates that global warming is real and unequivocal.

*http://www.realclimate.org/index.php/archives/2009/12/who-you-gonna-call

Eric, I don’t know of anyone who doesn’t think global warming is real and unequivocal. There may be some though. gg’s analysis is nice and shows that whether adjusted or unadjusted you would come to a very similar global average temperature. Of course this is not evidence that the adjustments are correct, simply a statement that the various adjustments that are made don’t put a large bias into the estimate of global temperature rise. Neither is it a statement that the estimate itself is correct. It may be more or less but I doubt that it is significantly different from any of the estimates out there.

For me the real questions centre on attribution and the millenial scale variability of the climate system. I think there are real debates to be had here and this is where we need to put real effort into resolving the outstanding questions. If we can make progress here then we might be able to begin to answer the climate sensitivity question and make robust estimates of temperature rises in 2100.

“I don’t know of anyone who doesn’t think global warming is real and unequivocal. There may be some though.”

Judging from what I see on the internet, there are lots of very loud people out there who doubt pretty much any and every aspect of the current theories of climate. Including this.

‘..the last word’ another closed mind from the Real Climate guys, I guess.

I do like this article and analysis – very helpful and I am glad to see a positive response from Willis Eschenbach and a further thought by Roman M

But like others have said here I don’t think there is an argument about global warming, – the argument is about Man made global warming and the implications of creating massive political and economic change which look like they will benefit big corporations in the West and will cripple developing and under developed nations.

So while Darwin is relevant it is not key, it may show some degree of ‘hiding the decline’ it may not.

And the bigger picture? Check it out here

Eric: I would be more impressed by your claims if you would publish at RC the change in temperature since 1850/1880/1900 and today for JUST THE LOCATIONS USED TO ARRIVE AT THE “GLOBAL” MEAN TEMPERATURE IN 1850/1880/1900. NO “global” data sets are legitimate that contain stations absent before 1910. All statements about “warmest ever” based on times series beginning in 1900 or before are FALSE given the absence of met. stations and temp. data from almost all of Africa and much of the tropics elsewhere before 1910. This is the mendacity of HadleyCRUT,GISS, and sadly also RC, using as baseline data a wholly unrepresentative sample of global mean temperature.

“If there is indeed no negative bias as you claim then it means urban heating is not being addressed at all, does it not?”

No, it doesn’t suggest this at all, unless more than 50% of the stations are urban (which they are not), and unless the urban heat island effect is large.

The urban heat island effect is minimal, as has been demonstrated conclusively.

There is little difference between the long-term rural and full set of all temperature trends.

Read this and the papers cited theirin.

http://www.realclimate.org/index.php/archives/2004/12/the-surface-temperature-record-and-the-urban-heat-island/

Well, this simple demonstration shows that UHI may not be as minimal as the papers claim:

A comparison of GISS data for the last 111 years show US cities getting warmer but rural sites are not increasing in temperature at all. Urban Heat Islands may be the only areas warming.

http://www.youtube.com/watch?v=F_G_-SdAN04&feature=player_embedded

Where can I get a full set of GISS station data? I went to the GISS site, but all I see is retrieval by individual station. I must be overlooking it somewhere.

GG:

You really want to show the distribution by decade. That will show if there is any “trend” baked in to the adjustment process.

Furthermore, as Gavin Schmidt pointed out, the CRU adjustments are not urban heat island adjustments anyway, they are simply homogenization adjustments. The urban heat island issue is separate.

So what NikfromNYC says is wrong, and so my response to him isn’t quite relevant.

But as I said, the UHI effect is demonstrated to be small. This is a strawman.

I posted a followup above which now quite strongly supports the authors conclusion.

GG: Indeed on the big picture. The deniers want to say “here is one station with a big adjustment, maybe they’re all like that”, but they don’t think to actually look at all of them. They just go on to the next individual station that fits their bill.

Can you clarify one thing? I thought these adjustments are applied by the NOAA (GHCN). I didn’t think CRU had anything to do with it. Does CRU use the GHCN adjustments, or have any collaboration in the process? I had been thinking they did their own separate adjustments.

gg I think you might have misinterpreted my comment about Darwin. I’m not surprised that there are stations that receive both large positive and negative adjustments. This we would expect from any 6000+ data set and a normal distribution. My comment related to the fact that for some periods there are 4 stations at Darwin, presumeably located at different sites, that record the same temperature. As a physical scientist I find it hard to justify an adjustment to the data given that 4 independent sets of temperature data in and around Darwin give the same annual averages.Now all these stations are recording temperature in a precise way, or they are all drifting at the same rate for whatever reason (instrumental, site factors etc.). The second option is the least likely.

gg I’ve now looked at the data and think that all the Darwin records relate to the same station. i.e. are multiple copies of a single data set so my point above isn’t valid.

I am interested to know though, when Darwin Airport first started. It surely wasn’t prior to 1920. How about 1935-1945 period?

I don’t know. Someone pointed out that before WWII the measurments were taken at the post office that was then bombed and destroyed during the war.

This seems to be the case. It’s also discussed in a link from Eschenbach’s original post.

According to wikipidia, the current Darwin airport was established as an air force base in 1940. This is consistent with the above. There was also a civilian airfield at Parap; this was closed in 1946 and civilian activity moved to the military location.

Eschenbach knew there was a site move, and didn’t want to adjust for it. Why, I don’t know.

Very cool analysis. I was hoping to do something similar during the Christmas break.

I will try to independently reproduce your results with my own code in the next few days.

@John. Thanks. You may want to take a couple of suggestions from the comments here too: I don’t think they add more information to the picture but sure enough they would be cool, especially the mapping one. If you use python, I read there is a module called geopy ( http://code.google.com/p/geopy/ ) that seems quite powerful.

I think at some point the analysis should be extended to include geographic weighting. It could also be partitioned in time to check for biases in different decades. There is a lot of work that could be done.

This is a great first step though. Instead of just picking individual stations it’s important to get the big picture by looking at all of them. Too many blogs are focused exclusively on the details and forget to step back and gain perspective.

No – you would not see if the data is loaded without looking at the adjustment through time.

Why not? He’s looking at the overall trend through all times.

I do agree that doing a proper spatial average would be the next step, but this result is itself informative for the question it is trying to answer.

BTW – John Reynolds pointed this possibility out first in this thread.

Paul Dennis: as a physical scientist, if you see four different records all labeled ‘Darwin Airport’ and all giving the exact same raw results, would you assume those are four different instruments? Seems quite likely to me that they’re duplicate records from the same instrument. Read over the GHCN documentation; they receive a lot of duplicate records.

Even in the off chance they are different instruments, they could be subject to the same errors (Time of observation change, upgrade in instrument, etc).

But in any case, the topic here is the big picture, not an individual station.

carrot I agree with you. If they all give identical measurements then I would be highly suspicious of the analysis, and also of GCHN quality control.

In a sense I’m playing devils advocate here because I think gg has made an interesting analysis that does not really get at the heart of the issue with respect to homegenization of data sets.

I think gg is saying that because the adjustments are normally distributed and centred close to zero then they don’t affect the overall estimation of global temperature. i.e. one could take both the adjusted and un-adjusted data and arrive at the same estimate. This does not mean that both the adjusted and unadjusted estimate are correct. What one needs to do is examine the adjustments on a case by case basis.

I do think however, before gg comes back at me over this point, that we might expect the adjustments to be normally distributed around zero. Stations may move to higher elevation, others to lower, changes in sensors might lead to normally distributed corrections around zero too. However, what we expect and what might be true may be different things. I have an open mind on this one.

carrot I’ve just looked at the Darwin plots at the NASA GISS web site and agree that they are probably the same station with multiple records, or perhaps several instruments at the same location.

Right. I’d think it more likely it’s multiple copies of the same data; even two different instruments at the same general location would give slightly different results. Somebody claiming to be from the BoM has mentioned that the station was moved around the airport grounds a couple times; perhaps there are duplicate records to show the overlap periods around the moves, I don’t know. We’ll see. All I know is that the GHCN often receives duplicate data; at some point it merges them together.

Either way, Eschenbach is sloppy here. He didn’t consider the possibility that those records were duplicates. He then wonders why adjustments were made. Well, he read how they’re made. He should have shown data from neighboring stations – a reference network. He didn’t do that, and instead just launched claims of fraud. Bizarre behaviour.

It’s as if he paid lip service to learning how homogenisation works, and then promptly forgot everything he read.

gg I’m not sure what Eschenbach did could be described as pointless.

If I understand you correctly your argument is that since the adjustments across all stations are normally distributed and centred close to zero then the overall effect on the global temperature is zero. I agree this is true if the stations are evenly distributed.

However, this is not a defense of the adjustments per se it is simply a statement that they might not affect the overall result. Now one can arrive at the right answer by the correct method, or one can arrive there by an incorrect method. However, if the method is incorrect then you have no way of knowing you’ve arrived at the right answer!

To evaluate the validity of adjustments it is necessary to look at individual stations. This is what Eschenbach has done. Now one station does not sustain the argument that these issues affect every station. But your dismissal of Eschenbachs approach as pointless, I contend, was in error.

Finally, you have demonstrated that the adjustments across the complete data set are slightly positive (0.17 deg/century) which is about 15% of the global temperature rise. This would seem to me to be significant.

“However, this is not a defense of the adjustments per se it is simply a statement that they might not affect the overall result.”

If they don’t affect the overall result, then what are skeptics jumping up and down about? Do we care about whether the global temperature trend is sound, or do we care about something unrelated?

You need only read the comments at WUWT to see what most so-called skeptics think Eschenbach’s analysis proves.

Finally, you have demonstrated that the adjustments across the complete data set are slightly positive (0.17 deg/century) which is about 15% of the global temperature rise. This would seem to me to be significant.

You left off a zero. The adjustment is 0.017 deg/century. That’s less than 2% of the observed trend.

Oops, I didn’t notice that you were talking about deg/century rather than deg/decade. My bad.

It is pointless because what do we learn from it? Eschenbach took Darwin as an exemple of ~6000 and concluded that (Fig 8 in his post) Darwin went through 4 adjustments in the last 60-70 years and they all happend to be positive? So what? What if instead of Darwin he had took BAMAKO/SENOU AIRPORT (12761291000). Also remote (Mali, Africa), also an airport, some degree of adjustment per decate only opposite sign (-0.24 instead of +0.24). This is how it’d look like:

What do we learn? That people in Africa “cheatt” to hide global warming? This is just a random station from those with symmetrical trend in the adjustment. I am sure you will be able to find extreme cases in one or the other directions.

The average adj I see is 0.017 per decade. If the trend of the 1900 is 0.19 per decade, that means not 15% but less than 10%. Must say I wouldn’t trust 0.017 as to be a faithful measure because I suppose not all stations were used: could be a bit more, bit less. If I were to do the analysis on the first place I would discard all stations on the left and right tails of the distribution if there are others more reliable to be used in the same grid.

“If the trend of the 1900 is 0.19 per decade”

Is there a typo in that sentence? Do you mean trends of the 1900s or 1990s?

Yes, there is a typo. It is 1900, meaning from 1900-2000. If I remember correctly is about 0.19 per decade isn’t it?

gg, didn’t see a reply button on your comment, so I’ll reply to myself.

If you mean global, it’s about 0.65 deg/century plotted as OLS, according to HadCRUT3, with faster rates from ~1910-1945 and from ~ 1975-2000.

http://www.woodfortrees.org/plot/hadcrut3vgl/from:1900/to:2000/plot/hadcrut3vgl/from:1900/to:2000/trend:2000/plot/none

Simple, yet excellent analysis. Not that the contrarians will care, but rational people sure will take notice.

Very interesting work here.

So, here is my understanding of what you are saying:

The problem:

Here is a good quality temperature data that shows no warming trend from Darwin, Australia. I fact, there are lots of stations in Australia that show no warming trend at all.

Despite that, after processing this data shows significant warming trend.

The solution:

There is nothing wrong with that because warming adjustments to the data are counterbalanced by symmetrical adjustments in opposite direction elsewhere.

Am I the only one who see a problem with this line of reasoning?

What you just did is showed that even if your processing assumes that 2=3 it’s OK because somewhere else it assumes that 3=2

“Urban Heat Islands may be the only areas warming.”

This is probably the stupidist statement I have ever seen on this subject.

Please explain to me how glaciers retreating on the Antarctic Peninsula know about the temperature in New York City?

You haven’t seen the teeming cities of millions, full of black parking lots, concrete buildings and air conditioners, on the Antarctic Peninsula, Siberia, and far Northern Canada?

Eric,

Have you done any infilling of missing data in your latest analysis lately? How’s the corrigendum going to your paper that showed proof of ‘unprecedented warming’ in East Antarctica going? Is it true that it was only a coincidence that the now infamous CRU code ‘read me’ file began with the name ‘Harry’?

Haven’t you had any converstions with your fellow RC colleague Michael Mann recently? He seems to think that there is a teleconnection between bristlecone pine tree ring growth in Southern California and mean global surface temperature. If thats true then why can’t glacier’s retreating on the West Antarctic peninsular be teleconnected to temperatures in New York City?

KevinUK

Big problem with this analysis. Lowering temps in the past is the sam e as raising temps recently. Either will increase the slope of warming.

Why is it so many skeptics try to attack AGW with rhetorical devices and clever sentences…rather than hard work and um, numbers?

Unless you can post up hard numbers, you are wasting everyone’s time. Its reminiscent of the Aristotelian approach to science, that the mysteries of the world can be reasoned out by just patiently thinking about them.

Newton would not be taken seriously if he didn’t have the math to back it up, same with Einstein and Fourier (who postulated the greenhouse effect). Incidentally, modern science has found minor problems with all said scientists theories. Perhaps if large business interests were threatened by Einstein’s theory of general relativity, we’d be hearing about how much of “fraud” he was since quantum mechanics had proven his theories inoperable at the sub-atomic level.

Your conclusion doesn’t follow from your data. Imagine a site that had constant temperature – if I adjust the data from 1950 by -1 and the data from 1990 by +1, I’ve created a warming trend where none existed while still having a 0-mean adjustment.

You don’t understand what was done here. It’s a distribution of trends, not a distribution of adjustments.

So in your case, there would be a warming trend, and it would appear as such above.

You’re right, I don’t understand. You’re saying the graph titled “Distribution of adjustment values” is not in fact a distribution of adjustments? I read it as saying there were (approximately) the the same number of positive as negative adjustsments – is that not what it’s saying?

No, you have created two trends, one descending and one ascending that average each other to zero so at the end you haven’t created a warming trend at all. See my answer to Mesa 4 or 5 comments below this.

Read more carefully what he did. He’s finding the trend introduced due to adjustments, not each individual adjustment. Look at the distribution – it’s of degrees/time.

An example indeed can be imagined of a linearly inclined slope having a kink put into it so the first 90 years become perfectly flat and the last 10 years super steep while retaining the exact same slope (so “adjustment” = 0).

But it’s a new level of conspiracy to keep the slope the same, one that less easily passes the laugh test since I mean once you’ve tugged at the data a bit or neglected to correct anything but upticks, would you really go to the eccentric trouble of going back and fixing the slope? Only a gatekeeper scenario might allow such coordinated fudging and I’m not sure GHCN has such a figurehead.

Besides, only 1 in 8 adjustment are zero value. They average to 0.1 magnitude (per decade) and max out at 1.5-2. So you’d have to adjust the slopes in a *coordinated* manner to achieve an overall near zero effect. Some would have to be +0.06 and then be matched by ones at -0.06 (or two at -0.03). Keeping slopes the same (“adjustment” = 0) would be relatively easy (but quite eccentric) but if you do alter the slope as the vast majority of adjustments do then how do you decide in an individual case what to adjust it to in order to hide your work? And you have to do this while adding real corrections too that limit what you can do to overall slope.

The study that would detect “coordinated slope deception” would be a graph the difference in raw vs. corrected data over time (not a slope study).

Here is someone’s plot of USHCN’s (US Historical Climate Network) adjustments:

http://cdiac.ornl.gov/epubs/ndp/ushcn/ts.ushcn_anom25_diffs_urb-raw_pg.gif

But this article is on GHCN (Global Historical Climate Network). At this point I’m lacking enough homework to know the difference. I’d like to see the relevant plot though. If it shows an incline then cognitive dissonance will rule the day.

But indeed this study doesn’t rule out fraud. It just makes it seem very unlikely for this database.

Again, the dimension of time is completely absent from this analysis. Since what we are talking about is the time series of temperature, it renders the analysis completely meaningless. The residual trend from the distribution depends on how it is ordered in time. Why is this a difficult point to grasp? It may well be that there is nothing to it, but it needs to be analyzed.

I see you keep saying that but I think you are confuse. I am taking the entire trend of the adjustment here and dividing it per decade just so to be able to do comparisons.

Think of a themormeter for which we have 100 years worth of data: from 1900 to 2000. Now imagine that this thermometer always measures 25C, constant, as in a flat line. This is your raw data.

Now, create a cooling adjustment in 1925 of -1 and then again a warming adjustment of +1 in 1975. Draw this on a piece of paper if it helps.

What is the trend of the raw data over 100 years? 0

What is the trend of the adjusted data over 100 years? 0

What is the trend of the adjustment itself? 0

There is no way you can get a total trend different than 0 if the trend of the adjustment itself is zero. OK?

The only reason why temporal data may be somehow important is because in former times when probes were not so many, the distribution of adjustment trends per grid might have been not homogeneous but we are talking little bits here.

So the raw data shows a constant 25 and after adjustment, you have a temp of 24 in 1925 and a temp of 26 in 1975. That looks like a 2 degree warming trend over 50 years, even though the individual adjustments sum to 0 and the raw data has no trend.

What am I missing?

No, you have a temperature of 24 in 1925 and then again a temperature of 25 in 1975. You do -1 (25-1=24) and then +1 (24+1=25).

Yes, you now have a temperature of 24 from 1925 until 1974, and a temperature of 25 from 1975 onward.

Now suppose this data set is observed between 1950 and 2000. Clearly, a 1 degree temperature trend will now be observed from 1950 to 2000. This is 2 degrees per century trend that was just created.

“Now, create a cooling adjustment in 1925 of -1 and then again a warming adjustment of +1 in 1975. Draw this on a piece of paper if it helps.

What is the trend of the raw data over 100 years? 0

What is the trend of the adjusted data over 100 years? 0

What is the trend of the adjustment itself? 0”

It seems to me that you are using “trend” to mean “change in average temperature over 100 years” whereas everybody else will be interpreting it to mean “change in average annual temperature over 100 years”.

If you cool 1925 and warm 1975 then, yes. the 100 year average temperature is not affected. But if you draw a “line of best fit” to the annual temperatures – which is what the IPCC refer to when they talk of a “trend” – then you will see a positive slope i.e. a warming trend.

Paul Dennis,

“Giorgio this is a nice analysis that shows that the adjustments appear to be near normally distributed with a mean close to zero for the station data. However this still leaves unresolved the question of how these adjustments propagate through to a global ‘average’ temperature.”

That question gets partial resolution here:

http://www.ncdc.noaa.gov/img/climate/research/ushcn/ts.ushcn_anom25_diffs_urb-raw_pg.gif

This is for the USHCN, which is a substantial sub component of the GHCN. Everone will note that the trend introduced by the adjustments is not ‘normally distributed and centered on zero’.

Evidently, the ‘last word on this fabricated scandal’ has yet to be spoken, True Believer’s wishes notwithstanding.

The maths involved are complex, and often do not operate intuitively. That holds true for the intuition applied to ‘adjustment distributions’ every bit as much as it does to the intuition applied to extreme homogenizations of single stations.

To get what you want, gg would have to do a spatial average. I bet it’s out there somewhere, though; it’d be pretty simple to show if you have the code for that already. Review the paper I cited by Easterling and Peterson, 1995 above – they do this calculation for the Northern Hemisphere. I’m sure somebody has done it globally more recently, but I haven’t found it yet.

But if nothing else, I think it’s safe to conclude that Eschenbach has shown absolutely nothing. He found a single station with a sizable adjustment. That’s it. He put zero effort into doing anything further – in terms of understanding why those adjustments were made, or in terms of the big picture, as we’re looking at here.

The GHCN raw dataset consists of more than 13000 stations, but of these only about half (6737) pass the initial quality control and end up in the final (adjusted) dataset.

For the sake of clarity could you explain this staement further?

Excellent work. This is the type of analysis the deniers should be doing if in fact they want to determine whether there’s some sort of systematic bias in adjusted thermometer readings. Come to think of it, how do we know they haven’t performed this type of analysis?

gg,

First of all I’m struggling with some of your basic station count numbers. Could you confirm where you downloaded your GHCN dataset from? GISS or NOAA? When did you download it. What do you count as a station? A station as given in the GHCN v2.temperature.inv file or a WMO station? Please note that the adjustments are applied to a WMO station

Also do you understand the central limit theorem? Are you aware that its perfectly possible to combine several distributions that have non zero means and none normal distributions so that when combined they approximate to a normal distribution. I think you’ve got a lot more work to do yet to reach the conclusion you have reached in your ‘big picture’ conclusion that these is ‘Lots of smoke, hardly any gun and that climatologists don’t falsify data?’. I’ve been doing my own analysis of the GHCN dataset looking for bias so I’m keen to first check that we’ve both kicked off on the same playing field before we play out the rest of the match. I know you provided Python scripts but more details as to exactly how you produced the result that goes into your histogram chart would be much appreciated.

KevinUK

I don’t think the central limit theorem’s conditions are satisfied here. I don’t think the adjustment distribution gg’s found is actually normal; it’s too leptokurtic. So working from the assumption that the central limit theorem applies strongly here is probably wrong.

Even if it did, it doesn’t imply that combining distributions with non-zero means will get you a normal distribution with a zero mean. If the “several distributions” tended to have means bigger than zero (which is what you’d expect if climatologists systematically increased the adjustments), combining them would still give you a distribution with a mean bigger than zero. The distribution gg’s pulled out is only a tiny bit bigger than zero; that’s gg’s point, as far as I can tell, and appealing to the central limit theorem doesn’t change that.

By “The distribution gg’s pulled out” I of course meant “The mean of the distribution gg’s pulled out”.

Yes dt, that is exactly my point. Thanks for clarifying it for me.

@KevinUK, I wrote in the post which files I downloaded and where from. There is link. For this kind of analysis I don’t need to care what the definition of a station is, since the goal here is to check whether scientists protocol introduces a warming bias or not so we put everything that it is both in the mean and mean_adj file, without caring too much on what it is.

[…] and Adjustment Trends Jump to Comments In his blog post, Giorgio Gilestro claims to show that the adjustments t made by GHCN to temperature data do not […]

Mesa anfd the others are right. The graph in the head cannot possibley show temporal features and the argument that the adjustments average out over time sheer arm waving.

I did some analysis on the data and posted it here:

http://statpad.wordpress.com/2009/12/12/ghcn-and-adjustment-trends/

the last graph is particularly interesting.

Thank you RomanM,

If the whole point is that adjustment produces warming,

why don’t you simply plot all stations raw vs all stations adj on the same graph.

I was going to do it myself but it seems you already have everything there and you can do it faster than me coding.

I don’t think that adjustment “produces” warming as much as exaggerates the amount. the problem is also that once you get past the genuine quality control issues, the adjustments can become ad hoc reflecting the views of the adjuster. This can affect their use in scientific studies. The graph that I posted can not possibly be merely due to the issues raised about the Darwin corrections – changes of location, altitude, etc. What is clearly seen is the lowering of temperatures in a linear fashion all the way back to the beginning of the twentieth century.

Plotting station raws versus adjusted can not indicate the total contribution of all the stations at a particular time or location.

“If the whole point is that adjustment produces warming,

why don’t you simply plot all stations raw vs all stations adj on the same graph.”

See my post above. Links to a plot that does that. Produces warming…

Very good analysis, thanks.

I see a lot of reference to Eschenbach’s work on Darwin. Here is a masterful debunk. Kudos to the journalist at the Economist who did it.

http://www.economist.com/blogs/democracyinamerica/2009/12/trust_scientists

Odd that a “journalist” wouldn’t sign it.

Anyway, “the unknown journalist” has received a response.

http://wattsupwiththat.com/2009/12/08/sticky-for-smoking-gun-at-darwin-zero/

As a rule, it has always been The Economist’s policy to not divulge the name of their correspondents. (Exceptions do exist though).

The journalist blogs as sparkleby, I cannot say if this is a “nom de plume”.

At his time, your link leads to nowhere, probably because sparkleby did expose once more why the response is also bunk. (See his comment in the blog page 4)

0.2 Degree C per decade X 10 = 2 Degree C per century.

Thanks.

I am not sure I understand why you tell us that.

Not bad for a quick and dirty analysis! I’d just like to correct one minor niggle. Judging by the plot, the adjustment distribution can’t actually be normal because it’s too leptokurtic: the peak and the tails are visibly higher than the normal distribution’s peak, and its shoulders are lower than the normal distribution’s. This doesn’t take away from your main point: the adjustments are almost symmetrically distributed about zero, although there is a modest positive skew.

Good eye dt. Wilk-Shapiro agrees with you.

No, you have a temperature of 24 in 1925 and then again a temperature of 25 in 1975. You do -1 (25-1=24) and then +1 (24+1=25).

OK, I understand the terminology a little better, but in this example you still have raw data with a slope of 0, and applied a 0 mean correction to it and wind up with a positive slope after adjustment, at least from 1925 onward. If you were looking at the adjusted graph, you’d say there has been warming since 1925 (from 24 to 25). Right?

I don’t think showing that adjustments have a zero-mean, normally distributed histogram shows that no bias is being introduced. Your criteria is necessary, but not sufficient. You’d also have to show that the adjustments are uncorrelated with time.

Actually I don’t even think that is sufficient (adjustments uncorrelated with respect to time), although it gets stronger. I think if you want to be rigorous, you ultimately need to do a sensitivity analysis to find out which stations are driving the overall trend, and then investigate the set of adjustments that were made (or not made) to those stations.

Everything else is interesting and increases credibility, but ultimately not dispositive.

It’s pretty obvious the timing of adjustments is required for this to mean anything at all. If the vast majority of cooling adjustments were well in the past (and thus warming adjustments in the recent past) then the overall trend will certainly be higher than with the raw data.

Also, exactly where did you get all of the original raw data? Per the CRU’s press release their raw data was deleted. Unless your position is that this was a very recent CRU lie, what data are you looking at that you know is raw? If you’re looking at GHCN, how do you know some of this wasn’t also adjusted prior to whatever supposedly “raw” dat set you have now. I realize there is data for which truly raw sets are available, but you are claiming to speak for the whole GHCN/CRU set, yes?

Are you also aware of the report that some of the data for the GHCN at NCDC/NOAA has recently been changed and some data previously available is gone? Is this incorrect, and if so how do you know? What other verification do you have that this is truly raw data?

On a related note, the assertion that UHI has no effect on temperature readings is patently bogus. I have a reasonably accurate thermometer in my car that consistently matches the local weather station when it is cloudy, or at night, or when the sun angle is otherwise low. (In bright daytime sun the delta from the local weather station is +2 to +5 F, and I factor this in to my further comments.) I routinely drive from suburban to urban, suburban to rural, urban to rural, etc. There is always a delta between rural and suburban and an even larger one between urban and rural. Five degress F is common, and I have noted more. This aligns with the CRN-4 rating specification for >= 2 degrees C of adjustment or worse.

The question is how much is this affecting all readings across the board. We can determine this by placing additional sensors (sited to meet CRN-1) around the current CRN-3 or worse ones and correlating. It seems to me that AGW proponents and skeptics both should be calling for this.

Eric Steig This is a very nice analysis, and is really the last word on this entire fabricated scandal.

Spoke too soon, methinks. Interesting analysis adding in the temporal dimension, RomanM.

“Spoke too soon, methinks. Interesting analysis adding in the temporal dimension, RomanM.”

Hemispheric warming… in the brain ?

Hi, I’m from Australia and I know Darwin well so I don’t have to guess as much as you guys are. (Yes, there is a systematic difference in an overlap comparison between 2 stations a few km apart, one at the airport and another at the BOM HQ, 1967-1973).

Point 1. Ask me for a realistic trend value from an Australian station and I’ll serve you a station that’s pretty close. Anything from 0 to 2 deg C per century extrapolated, with my study data covering the period 1968-2008.

Point 2. The offer goes from 0 to 2. We probably do not have a normal distribution to start with, at least on the subsets I have studied. Since GHG cannot tell the difference between sites, they should all rise in unison if GHG are causative. They do not. Very many show no change at all over the 40 years study period as does even Darwin over the term since 1885.

Point 3, in any case your analysis fails (as mine does)because you have not shown that data from countries like Australia have not already been adjusted before the USA adjusters get to work on them. Show me how you know that GHCN gets truly raw data from donor contries, please.

The proof comes when it can be shown not just that the distribution is normal, but also that the median is not displaced from its correct value. To do that, you need raw data and better algorithms than I’m reading.

Eric Steig, your ignorance preceeds you yet again. It is a “nice’ analysis, but the methods and data totally do not support the purported conclusion, just like a certain Antarctic study of recent note…

GG, pleaser correct the head post, without analysing the time dimension this is completely unrelated to any warming trend. As others have pointed out, if all the negative adjustments are, say, pre 1934, and all the positive adjustments are post 1934, you appear to claim that does not indicate the introduction of a warming trend, WTF ? What this appears to indicate is that the process used to create the “trend” occurs in equal amounts by reducing the temperature of old records and increasing those of the new, nothing more. Roman M’s graph very clearly shows the hugely significant temporal skew in adjustments.

Please explain or withdraw.

Please check my answers to Mesa and SG. If you adjust down first, then up you do NOT create a total warming trend: you create two trends one cooling and one warming that sum with each other. Why would you consider only the latter? It seems many people got stucked on this. I am going to explain it better in my post in a little while.

But people (i.e. the IPCC) don’t draw 2 trend lines, they draw 1. If you take a level line and push down on the left hand end and pull up on the right, even if by exactly the same amount, then you create A WARMING TREND overall within that period.

If you look at Roman M’s plot it becomes ever more sinister as that distribution is not just somebody leaning on the scales but doing so *carefully* so as to create just the trend that they need but not (in Dr Jones’s words) make it “look suspicious”.

No, you don’t. Again, I urge you to use paper and pencil if this can help you understand.

I am going to quote myself.

Think of a themormeter for which we have 100 years worth of data: from 1900 to 2000. Now imagine that this thermometer always measures 25C, constant, as in a flat line. This is your raw data.

Now, create a cooling adjustment in 1925 of -1 and then again a warming adjustment of +1 in 1975. Draw this on a piece of paper if it helps.

What is the trend of the raw data over 100 years? 0

What is the trend of the adjusted data over 100 years? 0

What is the trend of the adjustment itself? 0

There is no way you can get a total trend different than 0 if the trend of the adjustment itself is zero. OK?

You get two trends that compensate each other and my analysis TAKE THAT INTO ACCOUNT.

As Mesa said one comment below this: “there is no real net effect, no doubt”.

gg: I think people are worried about this possibility: The actual thermometer showed 25 C over all time, constant, but the adjustment gave 23 C at 1900-1920, 24 C at 1920-1940, 25 C at 1940-1960, 26 C at 1960-1980, and 27 C at 1980-2000.

The people are confused about your method so they think that in your analysis, all these adjustments cancel out, because it is 20 years of -2 C adjustment, 20 years of -1 C adjustment, 20 years of +1 C adjustment, 20 years of +2 C adjustment. They think all this cancels out in your plot, because they don’t understand what you plot.

That is why Roman made his plot – he plotted actually months of adjustment, not trends.

I see. Thanks for this interpretation carrot eater. Yes, this is not what I plot: it would not make sense to plot the average of the adjustment. As you said, I plot the TREND of the adjustment which in your example is (+2 – (-2))/100*10 = 0.4C / decade. So a case like the one you describe would correctly fit in the “highly warming” adjustment category because that is what it does.

I hope Roman comes back to discuss, as I think he was motivated by this confusion.

[Referring to your 11:51]

Ah, I think I see your problem – you’re assuming that the “adjustment down” applies to all data from that point rather than an “adjustment” being a time-limited deviation from the raw data.

Take your diagram and imagine all the readings pre 1920 have a degree subtracted – so 1900-1920 is 24 degrees. Then add a degree to all reading post 1980, so that period reads 26 degrees. 1920-1980 is still 25 degrees. So net adjustment is still zero; 20 years down, 20 years up; but fit a line to that data and the result is a manufactured/exaggerated warming trend over the period. That’s the suspicion, and I’m not sure your analysis is disproving that.

Giorgio:

First – thanks for your analysis. I don’t like to be int he position of commenting on others work, without doing it myself. The point is that there certianly is a non-trivial shape to the time ordered adjustment series. Yes, it goes down, then up, and averages to zero. But it creates a long upward trend over the period of time when most of the CO2 is being emmited. This may be perfectly fine, and the adjustments may be perfectly reasonable. I don’t know. But the adjustments, are not “small” compared to the temperature change over that period, so I think it would be good if everyone was comfortable with them, yes? Your graph certainly shows that from beginning to end of the whole time period there is no real net effect, no doubt. But during the period of time when we calibrate climate models for sensitivity to CO2 – there is a big effect – and it goes from lower left to upper right…..somehow this always seems to be the case with historical temperature record adjustments…I have no idea why?

You ask why would only a ‘later’ warming trend be considered? Consider how long the AGW charts ending in year 2000 were promoted in spite of the declining temperatures since then. Consider how many AGW charts have been promoted that did not show the MWP. Consider the now known discussions on how the MWP might be ‘removed’. Consider the recent GISS station temperature charts showing ‘new adjustments pushing early temperatures down and subsequent temperatures up’ and also the shorter time periods that no longer show earlier – higher temperatures. Because of this most recent revelation’ I would not be surprised if the next round of AGW charts to focus on recent history of recorded temperatures. Can you speculate on why in these instances?

My question is – Why should the hypothesis that increased CO2 is a cause of global warming be believed? It seems to be common practice for history (climate or temperature records) to be sliced and diced and then the parts that ‘fit’ ones favorite hypothesis to be retained.

I don’t know who I can believe. I am surrounded by sophists and statisticians. I am old enough to be able to say I have seen my environment warm. I tend to believe that the warming is actually a good thing. Food production is up and New York is not under water. The Arctic melts and refreezes. Antarctic ice shrinks in one area but that is offset by ice growth in other areas. Polar bear population shrinks only to grow back stronger.

I will quickly admit that I do not have a grasp on the big picture and I do resist being part of the herd as it rambles onward, toward the cliff.

You say you are confused by sophist and statisticians and I really believe you. I think actually this is a fil rouge in all the discussions I see on the climate change blog. People tend to go all over the place with their arguments and suddenly lose focus. Your comment is a good example of that, going from MWP to the fact that there is warming but it is good thing.

I believe if one is to understand something, one needs to stay focused on it. In this post I show that the adjustment do not introduce a big warming bias on the thermometer measurements. Please, let’s try to keep your focus on this.

gg, please excuse my ramblings. I was only trying to point out how I may pick and choose from an set of data to illustrate a particular point. You asked why one might consider only the later part of first a downward trend followed by an upward trend. I think you would agree there is plenty of evidence that happens.

I took your point as suggesting that if there is an equal distribution of upward and downward trends that they would by default balance out. RomanM’s analysis takes the process a step or two further and illustrates that the adjustments might be interpreted differently when looked at using the time dimension. You seem to dismiss the relevance and I extend the focus to address your question – why.

Giorgio,